CodOpt: Enhancing Drug and Vaccine Development by Using Deep Learning and Natural-Language Processing to Optimize Recombinant Codon Sequences via a Host-Independent Data Pipeline

Abstract:

Bibliography/Citations:

- Mohammed N. Baeshen, Ahmed M. Al-Hejin, Roop S. Bora, Mohamed M. M. Ahmed, Hassan A. I. Ramadan, Kulvinder S. Saini, Nabih A. Baeshen, and Elrashdy M. Redwan. Production of Biopharmaceuticals in E. coli: Current Scenario and Future Perspectives. Journal of Microbiology and Biotechnology, 25(7):953–962, 2015.

- Suliman Khan, Muhammad Wajid Ullah, Rabeea Siddique, Ghulam Nabi, Sehrish Manan, Muhammad Yousaf, and Hongwei Hou. Role of Recombinant DNA Technology to Improve Life. International Journal of Genomics, 2016:2405954, 2016.

- Arnold L. Demain and Preeti Vaishnav. Production of recombinant proteins by microbes and higher organisms. Biotechnology Advances, 27(3):297–306, May 2009.

- Evelina Angov. Codon usage: Nature’s roadmap to expression and folding of proteins. Biotechnology Journal, 6(6):650–659, 2011.

- P. M. Sharp and W. H. Li. The codon adaptation index – a measure of directional synonymous codon usage bias, and its potential applications. Nucleic Acids Research, 15(3):1281–1295, February 1987.

- Gila Lithwick and Hanah Margalit. Hierarchy of Sequence-Dependent Features Associated With Prokaryotic Translation. Genome Research, 13(12):2665–2673, December 2003.

- Vincent P. Mauro and Stephen A. Chappell. A critical analysis of codon optimization in human therapeutics. Trends in Molecular Medicine, 20(11):604–613, November 2014.

- Ming Gong, Feng Gong, and Charles Yanofsky. Overexpression of tnaC of Escherichia coli Inhibits Growth by Depleting tRNA2Pro Availability. Journal of Bacteriology, 188(5):1892–1898, March 2006.

- Vincent P. Mauro. Codon Optimization of Therapeutic Proteins: Suggested Criteria for Increased Efficacy and Safety. In Zuben E. Sauna and Chava Kimchi-Sarfaty, editors, Single Nucleotide Polymorphisms: Human Variation and a Coming Revolution in Biology and Medicine, pages 197–224. Springer International Publishing, Cham, 2022.

- Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. Deep learning. Nature, 521(7553):436–444, May 2015.

Additional Project Information

Research Plan:

Rationale

My research focuses on codon optimization, a computational technique for improving the production of recombinant proteins. By addressing the limitations of common codon-optimization techniques, my research can accelerate the development of crucial vaccines and pharmaceuticals produced through recombinant expression. This acceleration could save many lives, especially during outbreaks such as the COVID-19 pandemic, when therapeutics must be designed rapidly to fight disease.

Recombinant proteins underlie contemporary biotechnology and biomedicine. In biology, proteins are essential macromolecules with numerous roles, such as maintaining cell structure, transporting various molecules, and catalyzing biochemical reactions. Although all living organisms produce proteins naturally, specific proteins can be produced synthetically in host organisms such as bacteria and fungi.

As the primary mechanism for synthetic protein production, recombinant DNA technology underlies the discovery and development of medical treatments such as vaccines and pharmaceuticals. Recombinant proteins can be produced quickly, affordably, and safely and have vital roles in combating diseases. Numerous vaccines and pharmaceuticals produced with recombinant DNA technology, including COVID-19 vaccines and drugs that fight cancer, have saved millions of lives and enabled rapid improvements in human life.

Every protein is encoded by a gene within the DNA of an organism. A protein-coding gene consists of a sequence of codons (three-base units), and every codon other than the three stop codons corresponds to an amino acid, the building block of proteins. In transcription, a cell copies a gene into messenger RNA (mRNA). In translation, ribosomes read the mRNA codon by codon to assemble a sequence of amino acids that folds into a protein. There are 61 non-stop codons but only 20 amino acids, so most amino acids are encoded by more than one codon. Codons that encode the same amino acid are called synonymous codons.
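The codon arithmetic in the paragraph above can be checked directly. The sketch below builds the standard genetic code from the conventional compact 64-letter string, in which codons are enumerated in T, C, A, G order and `*` marks a stop codon. It then groups the synonymous codons. The names `GENETIC_CODE` and `SYNONYMS` are illustrative and do not come from any library.

```python
from collections import defaultdict

# Standard genetic code: codons enumerated with bases in T, C, A, G order; "*" marks stop codons.
BASES = "TCAG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
GENETIC_CODE = dict(zip(CODONS, AMINO_ACIDS))  # codon -> one-letter amino acid

# Group synonymous codons by the amino acid they encode, skipping stop codons.
SYNONYMS = defaultdict(list)
for codon, aa in GENETIC_CODE.items():
    if aa != "*":
        SYNONYMS[aa].append(codon)

sense_codons = sum(len(group) for group in SYNONYMS.values())
print(sense_codons, len(SYNONYMS))  # 61 20
print(sorted(SYNONYMS["L"]))        # ['CTA', 'CTC', 'CTG', 'CTT', 'TTA', 'TTG']
```

Only methionine (ATG) and tryptophan (TGG) have a single codon. Every other amino acid has between two and six synonymous codons, which is what gives codon optimization room to work.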

Synonymous codons appear equivalent because interchanging them does not change the amino-acid sequence encoded by a gene. However, many organisms do not use synonymous codons equally: some codons are used far more often than their synonymous alternatives, a phenomenon called codon bias. For example, the codons "CTG" and "CTA" both encode the amino acid leucine, yet in the genome of Escherichia coli, "CTG" occurs almost fifteen times as often as "CTA." Studies of protein expression indicate that rare codons such as "CTA" can slow translation, reducing protein production.

Since codon bias affects protein expression levels, codon optimization redesigns the codon sequence of a recombinant gene to maximize recombinant protein production, without changing the encoded protein. Among the sequence-dependent properties that affect recombinant synthesis, codon usage correlates most closely with protein expression levels. Therefore, optimizing the codons of a recombinant gene is essential to achieving efficient recombinant expression.

The most common algorithms for codon optimization replace each codon with a more frequent, synonymous alternative. These standard techniques do offer gains, but they overlook the consequences of eliminating rare codons. Using only frequent codons depletes the corresponding tRNA molecules and generates an unbalanced tRNA pool. This imbalance induces metabolic stress in the host cells and inhibits their growth. Furthermore, although rare codons can slow translation, these slowdowns are often essential to protein folding. Interchanging synonymous codons can therefore induce protein misfolding, impairing protein stability and function. In medications, misfolded proteins can harm patients by eliciting anti-drug antibodies that counteract both natural and recombinant proteins. These effects are not apparent from the sequence alone, so researchers may recognize the consequences of misfolding only during late-stage clinical trials or after a medication has launched. Codon-optimization algorithms must therefore increase expression levels while maintaining the structural and functional integrity of recombinant proteins.
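For comparison, the conventional strategy described above fits in a few lines: for each amino acid, always choose the synonymous codon with the highest usage in the host. This is a minimal sketch. The codon-usage numbers are hypothetical placeholders, not measured frequencies, and `naive_optimize` is an illustrative name. Every leucine in the output becomes the same codon, and this pattern concentrates demand on a single tRNA species.

```python
BASES = "TCAG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
GENETIC_CODE = dict(zip(CODONS, "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"))

def naive_optimize(protein: str, usage: dict[str, float]) -> str:
    """Back-translate a protein using only the single most frequent codon for each amino acid."""
    best: dict[str, str] = {}
    for codon, freq in usage.items():
        aa = GENETIC_CODE[codon]
        if aa not in best or freq > usage[best[aa]]:
            best[aa] = codon
    # Raises KeyError if the usage table has no codon for some amino acid in the protein.
    return "".join(best[aa] for aa in protein)

# Hypothetical usage values (per thousand codons), for illustration only.
USAGE = {"ATG": 27.0, "CTG": 50.0, "CTA": 4.0, "TTA": 14.0, "CTT": 11.0,
         "AAA": 33.0, "AAG": 10.0, "TAA": 2.0, "TGA": 1.0}

optimized = naive_optimize("MLLKL*", USAGE)
print(optimized)  # ATGCTGCTGAAACTGTAA: every leucine becomes CTG
```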

Deep learning can mitigate these challenges by learning the evolutionary patterns that contribute to both protein expression and protein stability. Deep-learning models extract features from data at multiple levels of abstraction. Many other machine-learning techniques require manual feature engineering. Neural networks, in contrast, learn features directly from data and can discover patterns that are difficult for humans to detect. With these capabilities, deep-learning models could analyze large datasets of genomic DNA sequences and learn the contextual usage of different codons. Evolutionary pressures have tuned highly expressed genes for both high expression and safe protein production. Neural networks could learn the abstract features within these genes and emulate their codon sequences, and in doing so optimize recombinant sequences to approach the same levels of efficiency and safety. Deep learning could therefore enhance recombinant expression and accelerate the production of crucial proteins such as vaccines and pharmaceuticals.

Since recombinant DNA technology underpins modern medicine and disease response, codon optimization with deep learning could aid patients around the globe who rely on recombinant therapies and save millions of lives when treatments must be designed quickly to fight epidemics and pandemics.

Research Questions

- How can deep learning substantially amplify recombinant protein expression by emulating highly expressed genes to optimize codon sequences? Which sequence-to-sequence model architecture will best improve predicted protein expression?

- By learning evolutionary patterns embedded in high-expression genes and emulating them, how can deep learning address the drawbacks of common optimization techniques, such as metabolic stress and protein misfolding?

- How can a web application give researchers around the globe access to efficient codon optimization tools to accelerate vaccine and pharmaceutical development and save lives?

Hypotheses

- Deep learning can learn to predict the codon sequences of highly expressed natural genes and apply this capability to recombinant proteins. In doing so, it can achieve expression levels comparable to those of a host's natural highly expressed genes. Furthermore, because neural networks avoid the tRNA imbalance and metabolic stress that damage host cells, they can surpass the expression levels achieved by common optimization techniques.

- Evolution has embedded information in natural genes that promotes protein stability and prevents protein misfolding. Standard optimization algorithms, however, disregard this information because they judge codons by frequency alone and cannot capture higher-level context. Evolutionary pressure has embedded patterns for safe expression within these genes. By emulating those patterns, deep learning can address the metabolic stress and protein misfolding that current solutions cause.

- An accessible, simple web application can provide global access to codon-optimization tools based on deep learning. Trained deep-learning models generate predictions quickly, so such a web application could rapidly provide researchers with optimized sequences for different heterologous hosts. With these sequences, researchers could accelerate the development and testing of new medicines, and labs and companies could accelerate the production of crucial recombinant proteins that save lives.

Engineering Goals

- The genomic data pipeline, from downloading genetic sequences to training the neural networks, should be entirely host-independent (all steps should be applicable to any host organism). With a host-independent pipeline, researchers could construct models for lesser-known or specialized hosts.

- Multiple sequence-to-sequence neural network architectures should be trained and compared. A systematic comparison of network architectures can lay the foundation for future research to extend and improve the most performant model.

- The deep-learning models should avoid the common optimization technique of replacing all rare codons with frequent codons. Instead, during training, the models should learn the evolutionary phenomena that determine where rare codons are necessary in natural, high-expression genetic sequences. After training and testing, feature analysis should be performed to discern the features learned by the networks.

Expected Outcomes

- A host-independent data pipeline for creating codon optimization models based on deep learning using genetic sequencing data.

- Deep-learning models that successfully optimize codon sequences and improve the predicted protein expression of those sequences.

- Deep-learning models that avoid the strategy of replacing all rare codons and instead learn the evolutionary patterns that correspond to rare-codon usage.

- Deep-learning models that successfully amplify real-world expression of recombinant proteins in laboratory tests.

Procedures

I will train neural networks to predict the codon sequences of highly expressed genes based on their amino-acid sequences. Then, I can predict optimized codon sequences for recombinant genes based on the corresponding proteins.

My data pipeline will be host-independent, so I will construct and test the pipeline using multiple common host species, including Escherichia coli, yeast (Saccharomyces cerevisiae), and Chinese hamster ovary cells (Cricetulus griseus).

Genomic Data Pipeline

- For each host, download all complete genomes available from the NCBI Assembly database to have a representative sample of strains used for recombinant expression.

- For popular hosts such as Escherichia coli, thousands of such genomes are available with millions of genes altogether. To reduce redundancy in the dataset, cluster the available genes using a sequence-analysis tool such as CD-HIT-EST, USEARCH, or VSEARCH. Store the centroid sequences in a FASTA file.

- Validate the remaining sequences by pruning ambiguous or invalid data.

  - Remove sequences with ambiguous bases (for example, the IUPAC code "R" denotes a base that could be adenine, "A", or guanine, "G").

  - Remove sequences with an incorrect start codon or stop codon. Since coding sequences consist of whole three-base codons, remove sequences whose lengths are not multiples of three.

  - Using the metadata available in the Assembly FASTA files, remove pseudogenes, which do not encode proteins, and hypothetical proteins, which are predicted to exist but lack direct evidence.

- Use the codon adaptation index (CAI) algorithm or the global codon adaptation index (gCAI) algorithm to predict the expression levels of the remaining sequences using their codon bias. Extract the top 10 or 20 percent of the sequences to use as a dataset of highly expressed genes for training.
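The validation steps above can be implemented as two small filters. This is a minimal sketch under stated assumptions. The bracketed header tags (`[pseudo=true]`, `[protein=hypothetical protein]`) are assumed to follow the style of NCBI CDS FASTA headers and should be checked against the files actually downloaded. Only ATG is accepted as a start codon by default. Bacteria such as E. coli also use alternative start codons like GTG and TTG, so the `start_codons` parameter may need widening for each host. All function names are hypothetical.

```python
VALID_BASES = set("ACGT")
STOP_CODONS = {"TAA", "TAG", "TGA"}
# Assumed NCBI-style "[key=value]" header tags that mark records to drop.
EXCLUDED_TAGS = ("[pseudo=true]", "[protein=hypothetical protein]")

def is_valid_cds(seq: str, start_codons=frozenset({"ATG"})) -> bool:
    """Sequence checks: unambiguous bases, whole codons, valid start and stop codons."""
    seq = seq.upper()
    if not seq or set(seq) - VALID_BASES:  # empty, or IUPAC ambiguity codes such as R or N
        return False
    if len(seq) % 3 != 0:                  # coding sequences consist of whole codons
        return False
    return seq[:3] in start_codons and seq[-3:] in STOP_CODONS

def keep_record(header: str) -> bool:
    """Metadata check: drop pseudogenes and hypothetical proteins."""
    return not any(tag in header for tag in EXCLUDED_TAGS)

def filter_records(records: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Keep (header, sequence) pairs that pass both the metadata and the sequence checks."""
    return [(h, s) for h, s in records if keep_record(h) and is_valid_cds(s)]

records = [
    (">gene1 [protein=DNA polymerase III]", "ATGAAACGTTAA"),
    (">gene2 [protein=hypothetical protein]", "ATGAAATAA"),
    (">gene3 [pseudo=true]", "ATGAAATAA"),
    (">gene4 [protein=example]", "ATGRAATAA"),  # ambiguous base R
    (">gene5 [protein=example]", "ATGAAATA"),   # length not a multiple of three
]
print([h for h, _ in filter_records(records)])  # ['>gene1 [protein=DNA polymerase III]']
```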

Neural Networks

- Consider multiple architectures for sequence-to-sequence neural networks. After training the models, compare the architectures systematically to determine the most performant version.

  - Convolutional neural networks (CNNs): consider a one-dimensional, sequence-to-sequence CNN inspired by the two-dimensional UNet architecture. Avoid pooling and transposed convolutional layers since the input sequences have varying lengths.

  - Recurrent neural networks (RNNs): consider multiple RNN flavors, including standard RNNs, gated recurrent unit (GRU) networks, and long short-term memory (LSTM) networks.

  - Transformers: consider a standard transformer with a multi-head attention layer.

- Train each model architecture on the dataset of highly expressed genes for each host, using the amino-acid sequences as input and the corresponding codon sequences as the expected output. Use the categorical cross-entropy loss function to evaluate the performance of each model. Continue the training procedure until the categorical accuracy increases negligibly between epochs.

- For each architecture, tune the available hyperparameters, including the dimensionality of the hidden layers, the encoding method (one-hot encoding or linear embedding), and the optimizer and learning rate.

- While training and tuning, save the model weights at each step.

- After tuning all architectures, compare the average gCAI achieved by each final model on the testing sequences. Determine the most performant model architecture, and save the model in the Open Neural Network Exchange (ONNX) file format.

- Evaluate the average GC content of the optimized sequences. The GC content of a genetic sequence equals the percentage of its bases that are guanine or cytosine. Extreme GC content (below 30% or above 70%) can inhibit translation, for example through mRNA secondary structures formed by base pairing between complementary bases. Therefore, the GC content of all optimized sequences should be between 30% and 70%.
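The training setup above takes amino acids as input and produces one codon per amino acid as output. A small encoding sketch makes this concrete. It assumes one-hot amino-acid inputs over the 20 amino acids plus a stop symbol `*`, and integer codon targets over all 64 codons, which matches the 64-way output described in the loss section. The helper names are hypothetical.

The `decode` helper adds a design choice that the plan does not state. It restricts each prediction to codons synonymous with the input amino acid, so the decoded sequence always encodes the original protein.

```python
BASES = "TCAG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
GENETIC_CODE = dict(zip(CODONS, "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"))
CODON_INDEX = {codon: i for i, codon in enumerate(CODONS)}  # 64 output classes
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY*"                       # 20 amino acids plus stop
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}

def encode_pair(protein: str, cds: str) -> tuple[list[list[float]], list[int]]:
    """One-hot encode the protein; map each codon of the CDS to a class index in 0..63."""
    if len(cds) != 3 * len(protein):
        raise ValueError("CDS length must be three times the protein length")
    inputs, targets = [], []
    for k, aa in enumerate(protein):
        codon = cds[3 * k:3 * k + 3]
        if GENETIC_CODE[codon] != aa:
            raise ValueError(f"codon {codon} does not encode {aa}")
        one_hot = [0.0] * len(AMINO_ACIDS)
        one_hot[AA_INDEX[aa]] = 1.0
        inputs.append(one_hot)
        targets.append(CODON_INDEX[codon])
    return inputs, targets

def decode(protein: str, probs: list[list[float]]) -> str:
    """For each position, choose the most probable codon among those encoding the input amino acid."""
    out = []
    for aa, p in zip(protein, probs):
        allowed = [i for i, codon in enumerate(CODONS) if GENETIC_CODE[codon] == aa]
        out.append(CODONS[max(allowed, key=lambda i: p[i])])
    return "".join(out)

x, y = encode_pair("MK", "ATGAAA")
print(len(x), len(x[0]), y)  # 2 21 [35, 42]
```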

Lab Testing

- To validate that the CodOpt models can significantly increase protein expression, compare the expression of a publicly available sequence for a recombinant protein with the expression of a sequence optimized by the most performant model.

- After expressing the original and optimized sequences in two separate cell cultures, use gel electrophoresis followed by protein staining to visualize the relative expression levels.

Web Application

- Use Python to build a web application that serves the models and allows researchers to request optimized sequences through an accessible interface.

- Use the ONNX file format to save the pretrained models, so future updates to the models can use other deep-learning frameworks for training.

- Through the application, allow researchers to:

  - Select a host species for optimization (such as E. coli or yeast).

  - Input a recombinant sequence for a vaccine, pharmaceutical, or other protein.

  - Generate a sequence optimized by the most performant CodOpt model.

- Build a JSON API through which developers can integrate the CodOpt models into custom organizational data pipelines.
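Below is a minimal sketch of how the JSON API could handle requests, using only Python's standard `json` module. The request fields (`host`, `sequence`), the status codes, the error format and the `optimizers` mapping are all hypothetical choices for illustration. In a deployed version, each optimizer function would wrap an exported ONNX model. This sketch deliberately leaves that part out.

```python
import json
from typing import Callable

def handle_optimize_request(body: str, optimizers: dict[str, Callable[[str], str]]) -> tuple[int, str]:
    """Parse a JSON request, run the optimizer for the requested host, and return (HTTP status, JSON text)."""
    try:
        request = json.loads(body)
        host = request["host"]
        protein = request["sequence"].strip().upper()
    except (json.JSONDecodeError, KeyError, TypeError, AttributeError):
        return 400, json.dumps({"error": "expected a JSON object with 'host' and 'sequence' fields"})
    if not isinstance(host, str) or host not in optimizers:
        return 404, json.dumps({"error": "unsupported host", "supported_hosts": sorted(optimizers)})
    return 200, json.dumps({"host": host, "input": protein, "optimized": optimizers[host](protein)})

# Placeholder optimizer for illustration only; a real one would run a trained model.
optimizers = {"ecoli": lambda protein: "ATG" * len(protein)}
status, body = handle_optimize_request('{"host": "ecoli", "sequence": "mmm"}', optimizers)
print(status, body)  # 200 {"host": "ecoli", "input": "MMM", "optimized": "ATGATGATG"}
```

Keeping the handler independent of any web framework means it can be tested directly and then wired into whichever Python server the application uses.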

Results and Data Analysis

Categorical Cross-Entropy Loss

The categorical cross-entropy (CCE) loss function will be used to train the neural networks. CCE quantifies the difference between two probability distributions or between two sets of probability distributions. For codon optimization, the models will output one codon probability distribution for each input amino acid. This output probability distribution will be compared to the target probability distribution, which will contain a probability of 1 for the correct codon and a probability of 0 for the sixty-three other codons.

Let $T$ and $O$ be the target (determined from the dataset) and output (from the model) tensors. Each tensor has shape $(I, L, 64)$, corresponding to $I$ input sequences in one batch, $L$ amino acids per sequence, and 64 codon probabilities per amino acid. The CCE loss function then equals

$$\mathrm{CCE}(T, O) = -\frac{1}{I L} \sum_{i=1}^{I} \sum_{l=1}^{L} \sum_{k=1}^{64} T_{i,l,k} \log O_{i,l,k}$$
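The sketch below works through the loss described above in plain Python, using nested lists shaped (I, L, 64). It averages over all I × L amino-acid positions. That averaging convention is an assumption, chosen to match common framework defaults. Each target row is one-hot, so the inner sum reduces to the negative log-probability that the model assigns to the correct codon. In practice, a deep-learning framework's built-in loss would be used instead.

```python
import math

def categorical_cross_entropy(targets, outputs, eps=1e-12):
    """Mean CCE over all (sequence, position) pairs of nested lists shaped (I, L, 64)."""
    total, count = 0.0, 0
    for t_seq, o_seq in zip(targets, outputs):
        for t_vec, o_vec in zip(t_seq, o_seq):
            # eps guards against log(0) when the model assigns zero probability to a codon.
            total -= sum(t * math.log(max(o, eps)) for t, o in zip(t_vec, o_vec))
            count += 1
    return total / count

target = [[[1.0] + [0.0] * 63]]    # one sequence, one amino acid, correct codon at index 0
uniform = [[[1 / 64] * 64]]        # an untrained model spreading probability evenly
print(round(categorical_cross_entropy(target, uniform), 4))  # 4.1589, i.e. ln(64)
```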

Codon Adaptation Index

The codon adaptation index (CAI) algorithm predicts protein expression by analyzing the codon bias of a genetic sequence. The algorithm requires a reference set of highly expressed genes. Using this reference set, a weight is calculated for each of the sixty-one non-stop codons, where codons that appear more frequently in the reference set receive higher weights. Then, the CAI of a genetic sequence equals the geometric mean of the weights of the codons in the sequence, with repeats for repeated codons.

Let $S$ be a reference set of highly expressed genes, determined from expression data. For every codon $c$, let $A(c)$ be the set containing all codons synonymous to $c$, including $c$ itself. For any codon $c'$, let $f(c')$ be the number of occurrences of $c'$ in $S$. Then, the weight $w(c)$ for codon $c$ is

$$w(c) = \frac{f(c)}{\max_{c' \in A(c)} f(c')}$$

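Then, the CAI of a sequence $s$ of length $n$, with successive codons $c_1, c_2, \ldots, c_n$, is the geometric mean of the $n$ weights of these codons,

$$\mathrm{CAI}(s) = \left( \prod_{k=1}^{n} w(c_k) \right)^{1/n}$$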
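The CAI definition above translates into Python as follows. This is a minimal sketch with two assumptions beyond the text:

- A pseudocount of 0.5 is added to every codon count. Without it, codons absent from the reference set would receive weight 0 and collapse the geometric mean to zero.
- The geometric mean is computed through logarithms, to avoid numerical underflow on long genes.

Following the text, all 61 non-stop codons are weighted, so the single-codon amino acids (methionine and tryptophan) always receive weight 1. The function names are hypothetical.

```python
import math
from collections import Counter

BASES = "TCAG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
GENETIC_CODE = dict(zip(CODONS, "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"))

def split_codons(seq: str) -> list[str]:
    """Split a sequence into consecutive codons, dropping any incomplete trailing codon."""
    return [seq[i:i + 3] for i in range(0, len(seq) - 2, 3)]

def codon_weights(reference: list[str], pseudocount: float = 0.5) -> dict[str, float]:
    """w(c) = f(c) / max f(c') over the synonymous codons c' of c, counted in the reference set."""
    counts = Counter(c for seq in reference for c in split_codons(seq))
    weights = {}
    for codon, aa in GENETIC_CODE.items():
        if aa == "*":
            continue  # stop codons are not weighted
        synonyms = [c for c, a in GENETIC_CODE.items() if a == aa]
        f_max = max(counts[s] + pseudocount for s in synonyms)
        weights[codon] = (counts[codon] + pseudocount) / f_max
    return weights

def cai(seq: str, weights: dict[str, float]) -> float:
    """Geometric mean of codon weights; codons without a weight (e.g. stop codons) are skipped."""
    logs = [math.log(weights[c]) for c in split_codons(seq) if c in weights]
    if not logs:
        raise ValueError("sequence contains no weighted codons")
    return math.exp(sum(logs) / len(logs))

weights = codon_weights(["CTGCTGCTG", "CTGAAA"])
print(round(weights["CTA"], 4), round(cai("CTACTG", weights), 4))  # 0.1111 0.3333
```

Setting `pseudocount=0` recovers the formula exactly, as long as every codon appears in the reference set.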

Global Codon Adaptation Index

The global codon adaptation index (gCAI) algorithm extends the CAI algorithm. While the CAI algorithm requires a reference set of highly expressed genes, the gCAI algorithm constructs a reference set recursively from an entire genome using codon bias to predict expression. This difference is crucial because some recombinant hosts have no publicly available expression data, so a CAI reference set cannot be established.

Let $S$ be a reference set of highly expressed genes, determined with the recursive gCAI algorithm. For every codon $i$, let $S_i$ be the subset of $S$ containing all sequences that contain codon $i$. Let $j$ be the amino acid corresponding to codon $i$. Then, let $x_{i,j}$ be the number of occurrences of codon $i$ in $S$, and let $y_j$ be the maximum value of $x_{i',j}$ over all codons $i'$ corresponding to amino acid $j$. Then, the weight $w_{i,j}$ for codon $i$ is

$$w_{i,j} = \frac{x_{i,j}}{y_j}$$

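Then, the gCAI for a gene $g$ of length $L$ is the geometric mean of the $L$ weights $w_1, w_2, \ldots, w_L$ of the $L$ successive codons in $g$, where $w_k$ denotes the weight $w_{i,j}$ of the $k$-th codon,

$$\mathrm{gCAI}(g) = \left( \prod_{k=1}^{L} w_k \right)^{1/L}$$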

The models will be compared and evaluated according to the average gCAI of their output sequences. Since gCAI predicts the protein expression of a sequence, this comparison will reveal which model best improves protein production.

Wilcoxon Signed-Rank Test

A one-sided Wilcoxon signed-rank test will be used to determine whether the optimized sequences have significantly higher gCAI values than the original sequences.

Let there be $N$ sequences in total. For sequence $i$, let $x_{1,i}$ be its original gCAI and let $x_{2,i}$ be its gCAI after optimization. Consider the absolute difference $|x_{2,i} - x_{1,i}|$ for every sequence $i$, and rank these differences across all the sequences: let $R_i$ be the rank of $|x_{2,i} - x_{1,i}|$, where the smallest absolute difference receives rank 1 and the largest receives rank $N$. Then, the test statistic for the Wilcoxon signed-rank test is

$$W = \sum_{i=1}^{N} \operatorname{sgn}(x_{2,i} - x_{1,i}) \, R_i$$

where sgn is the sign function, equalling -1 for negative numbers, 0 for 0, and 1 for positive numbers. The p-value can be determined by comparing the test statistic W to its distribution under the null hypothesis.
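The statistic W can be computed directly. This sketch follows the convention in the text: all N absolute differences are ranked, and zero differences contribute sgn = 0. Two further assumptions go beyond the text:

- Tied absolute differences receive their average rank.
- The one-sided p-value uses a normal approximation. Under the null hypothesis, each nonzero difference is equally likely to be positive or negative, so W has mean 0 and variance equal to the sum of the squared ranks of the nonzero differences.

For small samples, an exact test is preferable. SciPy's `scipy.stats.wilcoxon` with `alternative="greater"` provides one, although its default zero handling discards zero differences before ranking. The function name `signed_rank_test` is hypothetical.

```python
import math

def signed_rank_test(before: list[float], after: list[float]) -> tuple[float, float]:
    """Return W = sum(sgn(after_i - before_i) * R_i) and a one-sided p-value (normal approximation)."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # give tied absolute differences their average rank
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1

    def sgn(d: float) -> int:
        return (d > 0) - (d < 0)

    w = sum(sgn(d) * r for d, r in zip(diffs, ranks))
    variance = sum(r * r for d, r in zip(diffs, ranks) if d != 0)
    if variance == 0:
        raise ValueError("all differences are zero")
    z = w / math.sqrt(variance)
    p_greater = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z >= z) for the alternative "after > before"
    return w, p_greater

w, p = signed_rank_test([0.5, 0.6, 0.55, 0.7], [0.9, 0.95, 0.85, 0.96])
print(w, round(p, 3))  # 10.0 0.034
```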

GC Content

The GC content of a genetic sequence equals the proportion of guanine and cytosine among all four bases. For a gene $g$, let $A$, $C$, $G$, and $T$ be the number of occurrences of adenine, cytosine, guanine, and thymine in $g$, respectively. Then, the GC content of $g$ is

$$\mathrm{GC}(g) = \frac{G + C}{A + C + G + T} \times 100\%$$

Extreme GC content (below 30% or above 70%) can hinder translation, for example through the formation of stable mRNA secondary structures, and so reduce protein production. Therefore, the GC content of all optimized sequences will be measured to ensure that the models do not shift codon usage in ways that push GC content outside this range.
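The GC-content definition and the 30% to 70% check translate directly into code. This is a minimal sketch. The denominator counts only A, C, G and T, which matches the definition above in terms of those four base counts. The function names are hypothetical.

```python
def gc_content(seq: str) -> float:
    """Percentage of A/C/G/T bases in seq that are guanine or cytosine."""
    seq = seq.upper()
    counts = {base: seq.count(base) for base in "ACGT"}
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sequence contains no A, C, G, or T bases")
    return 100.0 * (counts["G"] + counts["C"]) / total

def within_gc_range(seq: str, low: float = 30.0, high: float = 70.0) -> bool:
    """True if the sequence's GC content lies in the accepted range."""
    return low <= gc_content(seq) <= high

print(round(gc_content("ATGGCCAAATAA"), 1), within_gc_range("ATGGCCAAATAA"))  # 33.3 True
```

Applied to a batch of optimized sequences, `all(within_gc_range(s) for s in optimized_sequences)` confirms that every output stays in range.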

Feature Analysis

Feature analysis will be performed to confirm that the CodOpt models capture the evolutionary phenomena that underlie rare-codon usage in high-expression genes. For recurrent networks such as RNNs, GRUs, and LSTMs, feature analysis can be performed by plotting the output of each unit in every recurrent cell, since individual recurrent units often learn to track particular sequence properties during training.

Risk and Safety

I will conduct all my data processing, model training, results analysis, and web-application development using my personal computer and cloud-computing environments. Therefore, there will be no major risks or hazards involved.

With the help of my mentors, an optimized sequence generated by my models could be expressed in Escherichia coli or another host through recombinant DNA technology. Such a procedure would occur in one of my mentors' labs. I would collaborate with my mentors to plan the procedure, but I would not be physically involved in its execution. The experiment would be conducted by lab professionals who have experience in lab protocols for recombinant expression.

Questions and Answers

1. What was the major objective of your project and what was your plan to achieve it?

Through my project, I aimed to develop a novel method for codon optimization.

Codon optimization is a strategy for improving the expression of recombinant proteins, which are proteins created in and extracted from host organisms for biomedical use. Recombinant proteins are crucial to modern biology and medicine, and they include vaccines and pharmaceuticals that combat disease and save lives amid disease outbreaks. To manufacture a recombinant protein, scientists must design a recombinant gene encoding the protein. In particular, for each amino acid within the protein, scientists must select one codon encoding that amino acid.

Research demonstrates that codons used frequently in a genome increase translation speeds for individual genes, since cells produce more of the corresponding tRNA molecules for ribosomes to use. Therefore, common codon-optimization techniques remove all rare codons from recombinant genes and replace them with more frequent codons, with the intent of increasing translational efficiency. However, this strategy causes tRNA imbalance and can even affect protein folding. Rare codons momentarily slow translation at specific positions, which gives polypeptide chains time to fold. In host cells, tRNA imbalance causes toxic metabolic stress. In patients, misfolded therapeutic proteins can elicit anti-drug antibodies, which can neutralize patients' own natural proteins and cause dangerous side effects.

As I reviewed the challenges of common codon-optimization techniques, I realized that natural high-expression genes have been tuned by evolution for both efficient translation and safe protein production without misfolding. Deep learning models could learn evolutionary patterns from these genes to understand where rare codons must be used to preserve protein stability. Therefore, in my project, I sought to develop deep-learning models that could emulate natural high-expression genes to improve upon traditional codon optimization techniques.

I achieved this goal by first curating a dataset of highly expressed genes for three common heterologous hosts. Then, I trained multiple sequence-to-sequence neural networks to predict the codon sequences of these genes from the corresponding amino-acid sequences. I tested eight distinct model architectures, systematically comparing their performance and laying the foundation for future research extending these models. With these trained models, I can optimize a recombinant protein by inputting its amino-acid sequence and generating a codon sequence that emulates the highly expressed genes of a host organism.

a. Was that goal the result of any specific situation, experience, or problem you encountered?

During the COVID-19 pandemic, I learned about the manufacturing of various vaccines, including mRNA-based and subunit-based ones, and how codon optimization drives the efficient production of such vaccines and other synthetic proteins. However, many optimization algorithms are relatively simple, so they can induce host-cell stress and protein misfolding by failing to consider the significance of individual codons. Based on my experience with deep learning, I understood that a neural network could learn hard-to-detect patterns and structures embedded in the genomes of heterologous hosts. By revising sequences to resemble the high-expression genes of these genomes, a neural network could enable efficient protein production without inducing harmful side effects. Thus, I researched how neural networks could optimize genetic sequences for heterologous expression by learning and emulating the codon-usage patterns embedded in hosts' genomes.

b. Were you trying to solve a problem, answer a question, or test a hypothesis?

Through my project, I worked to overcome the limitations of commonly used codon-optimization tools. Typical strategies for codon optimization replace all rare codons with frequent codons, since rare codons can slow down translation and thus hinder protein production. However, slowdowns in translation are necessary at points where a growing polypeptide chain begins folding into its final protein form. Therefore, removing all rare codons alters protein-folding dynamics, resulting in the production of misfolded proteins that can endanger patients. Furthermore, using only frequent codons for recombinant expression can exhaust the corresponding tRNA pools. This depletion slows the production of all proteins, causing metabolic stress that damages and can kill host cells. With my research, I sought to overcome these limitations of common codon-optimization techniques.

Through my research, I sought to answer three questions about the capabilities of deep learning for codon optimization: can deep learning achieve a balance between efficiency and safety in codon optimization and surpass the protein expression levels achieved by common optimization techniques? Can deep learning extract abstract evolutionary phenomena related to rare-codon usage from natural high-expression genes and reveal factors related to codon usage that researchers have not previously considered? Can a web application provide efficient optimization tools based on deep learning to researchers around the globe to accelerate the design and production of crucial vaccines and pharmaceuticals?

I sought to test multiple hypotheses regarding these questions. I believed that deep learning could successfully learn evolutionary patterns by training on large genomic datasets. I also believed that experimental verification with a real-world recombinant protein could demonstrate that deep learning can significantly improve protein expression.

2. What were the major tasks you had to perform in order to complete your project?

After my literature review, I identified deep learning as a solution to the limitations of conventional codon-optimization tools. After training with examples of input and output data, neural networks can comprehend abstract features embedded in large datasets. I recognized that, with this potential, deep learning models could learn the evolutionary phenomena that underlie contextual codon usage in natural genes. Since evolution has tuned natural genes for both translational efficiency and protein stability, I trained my neural networks to predict the codon sequences of highly expressed genes from the corresponding amino-acid sequences.

Since different heterologous hosts exhibit different patterns of codon bias, individual deep-learning models must be trained for individual host species. In my research, I created a host-independent pipeline, so researchers can utilize my algorithms to train models for specialized or less common species. To test and demonstrate my pipeline, I chose to train models for three common heterologous hosts: Escherichia coli, yeast (Saccharomyces cerevisiae), and Chinese hamster ovary cells (Cricetulus griseus).

To begin building my neural networks, I downloaded genetic sequencing data from the NCBI Assembly database. For each heterologous host, I downloaded all available genomes from the Assembly database in the FASTA file format (a text format for genetic sequences). For popular hosts such as E. coli, thousands of such genomes are available, with millions of genes altogether. To reduce redundancy in the downloaded data, I performed distance-based greedy clustering to group sequences with pairwise similarity above 90%. I stored the centroid sequences, along with their associated metadata, in a new FASTA file. Finally, I validated the remaining sequences, pruning those that contained ambiguous bases, had invalid start or stop codons, or were pseudogenes.
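The distance-based greedy clustering step can be sketched in the spirit of the greedy incremental approach used by tools such as CD-HIT-EST. Sequences are processed from longest to shortest. Each one either joins the first existing cluster whose centroid is at least 90% similar or becomes a new centroid.

This is a simplification. `difflib.SequenceMatcher.ratio()` from the Python standard library stands in for the alignment-based sequence identity that dedicated tools compute. Because the comparisons are pairwise, the sketch suits small examples, not millions of genes. The name `greedy_cluster` is hypothetical.

```python
from difflib import SequenceMatcher

def greedy_cluster(seqs: list[str], threshold: float = 0.9) -> list[str]:
    """Greedy incremental clustering; returns one centroid (representative) per cluster."""
    centroids: list[str] = []
    for seq in sorted(seqs, key=len, reverse=True):  # longest sequences become representatives first
        for centroid in centroids:
            # SequenceMatcher.ratio() is a rough stand-in for alignment-based identity.
            if SequenceMatcher(None, centroid, seq).ratio() >= threshold:
                break  # joins this centroid's cluster
        else:
            centroids.append(seq)  # no sufficiently similar centroid: start a new cluster
    return centroids

seqs = ["ATGAAACCCGGGTTTTAA", "ATGAAACCCGGGTTCTAA", "ATGCCCCCCCCCCCCTAG"]
print(greedy_cluster(seqs))  # ['ATGAAACCCGGGTTTTAA', 'ATGCCCCCCCCCCCCTAG']
```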

To construct my final training dataset, I filtered the remaining sequences for those with high predicted protein expression. To predict expression, I used the global codon adaptation index (gCAI) algorithm. I recursively traversed the validated sequences to calculate a weight for every codon according to the gCAI algorithm. Then, I calculated the gCAI of each codon sequence as the geometric mean of the weights of its codons. Research demonstrates that gCAI correlates well with protein expression, so I sorted the remaining sequences by their gCAI values and collected those above the 80th percentile as my dataset of highly expressed genes.
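The recursive selection of highly expressed genes can be illustrated with a hypothetical fixed-point iteration:

1. Start with every gene as the reference set.
2. Compute codon weights from the reference set.
3. Score each gene by the geometric mean of its codon weights.
4. Keep the top 20% of genes (those above the 80th percentile) as the new reference set.
5. Repeat until the reference set stops changing.

This sketches the general idea under stated assumptions. The stopping rule, the 0.5 pseudocount and the iteration cap are illustrative choices, and the exact gCAI recursion used in the project may differ.

```python
import math
from collections import Counter

BASES = "TCAG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
GENETIC_CODE = dict(zip(CODONS, "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"))

def split_codons(seq: str) -> list[str]:
    return [seq[i:i + 3] for i in range(0, len(seq) - 2, 3)]

def codon_weights(reference: list[str], pseudocount: float = 0.5) -> dict[str, float]:
    """Weight of each non-stop codon relative to its most frequent synonym in the reference set."""
    counts = Counter(c for seq in reference for c in split_codons(seq))
    weights = {}
    for codon, aa in GENETIC_CODE.items():
        if aa != "*":
            f_max = max(counts[s] + pseudocount for s, a in GENETIC_CODE.items() if a == aa)
            weights[codon] = (counts[codon] + pseudocount) / f_max
    return weights

def score(seq: str, weights: dict[str, float]) -> float:
    """Geometric mean of codon weights (stop codons skipped)."""
    logs = [math.log(weights[c]) for c in split_codons(seq) if c in weights]
    return math.exp(sum(logs) / len(logs)) if logs else 0.0

def iterative_reference(genes: list[str], top_fraction: float = 0.2, max_iter: int = 20):
    """Re-derive the reference set from the top-scoring genes until it stops changing."""
    k = max(1, int(len(genes) * top_fraction))  # top 20% = above the 80th percentile
    reference = list(genes)                       # start from the whole genome
    for _ in range(max_iter):
        weights = codon_weights(reference)
        ranked = sorted(genes, key=lambda g: score(g, weights), reverse=True)
        new_reference = ranked[:k]
        if sorted(new_reference) == sorted(reference):
            break                                 # fixed point reached
        reference = new_reference
    return reference, codon_weights(reference)

genes = ["CTGCTG"] * 6 + ["CTACTA"] * 4  # toy "genome" of ten genes
reference, weights = iterative_reference(genes)
print(reference, round(weights["CTA"], 3))  # ['CTGCTG', 'CTGCTG'] 0.111
```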

After curating my training dataset, I considered multiple neural network architectures for translating amino-acid sequences to codon sequences. From my literature review, I identified several architectures specialized for sequential data processing. These included a one-dimensional convolutional neural network with a stride of one, several recurrent neural networks (simple RNNs, GRU networks, and LSTM networks), and a transformer with multiple attention heads.

For each heterologous host, I trained each model on my dataset of highly expressed genes, providing amino-acid sequences as input and the corresponding codon sequences as the expected output. The training sequences varied in length from fifty amino acids to thousands, so each training batch contained a single sequence. I used the categorical cross-entropy loss function to evaluate model performance during training, and I trained the models until the categorical accuracy increased negligibly between epochs.

As I trained the models, I tuned all available hyperparameters for each model architecture, experimenting with hundreds of specific neural networks. For each architecture, I tuned the size and number of hidden layers; larger hidden layers offered better performance but sometimes caused overfitting according to the validation metrics. I also experimented with multiple encoding methods for the input amino acids, including one-hot encoding and linear embedding. Finally, I experimented with the optimizer and learning rate for training, and I ultimately chose the Adam optimizer with a learning rate between 0.0001 and 0.00001 for all model architectures.

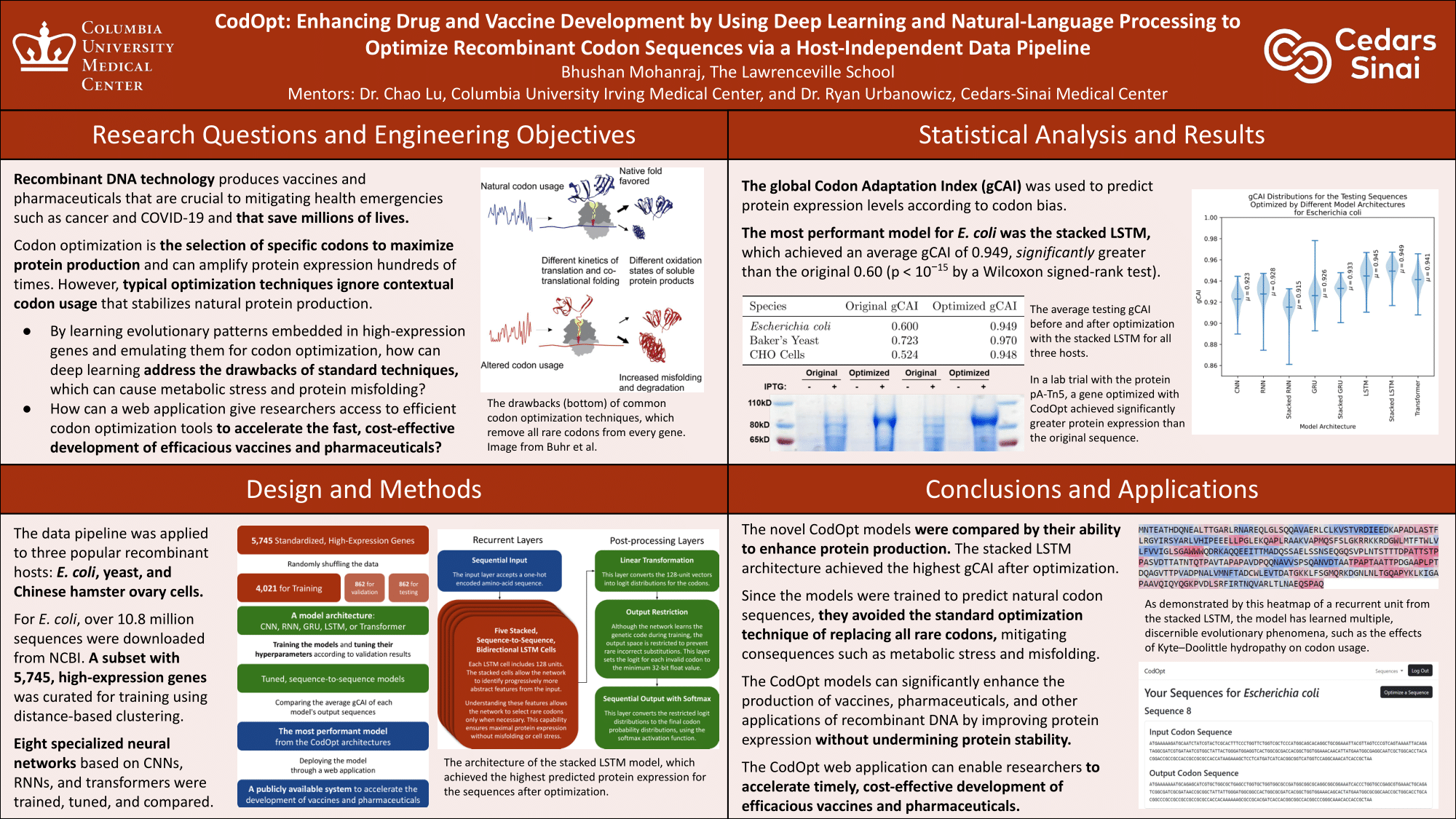

After training and tuning all the model architectures, I saved the final model for each architecture in the Open Neural Network Exchange (ONNX) file format. I compared the final models by the average gCAI of the sequences each model optimized. Because gCAI correlates closely with protein expression, this comparison serves as a proxy for each model's ability to improve protein production. My stacked LSTM model achieved the highest average gCAI (0.949) on the testing dataset. A one-sided Wilcoxon signed-rank test confirmed that the gCAI values of its optimized sequences were significantly greater than those of the corresponding unoptimized sequences, which averaged 0.600 (p < 0.0001).
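A one-sided Wilcoxon signed-rank test compares paired values, here each test sequence's gCAI before and after optimization. The sketch below computes the statistic with the large-sample normal approximation (zero differences dropped, average ranks for ties, no tie correction to the variance). With SciPy installed, `scipy.stats.wilcoxon(optimized, original, alternative="greater")` is the usual route; the function name here is hypothetical.

```python
import math


def wilcoxon_greater(optimized, original):
    """One-sided signed-rank test of H1: optimized values tend to exceed original ones.

    Returns (W_plus, p_value) using the normal approximation.
    """
    diffs = [a - b for a, b in zip(optimized, original) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    # Rank absolute differences (1-based), averaging ranks across ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = 0.5 * math.erfc(z / math.sqrt(2))  # upper-tail standard normal probability
    return w_plus, p
```

For small numbers of pairs an exact test is preferable; the normal approximation is reasonable for test sets of hundreds of sequences.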

To check that optimization did not introduce problematic base composition, I evaluated the GC content of the optimized sequences. The GC content of a genetic sequence equals the proportion of its bases that are either guanine or cytosine. GC content below 30% or above 70% can hinder translation, for example through mRNA secondary structures, hurting protein production. The GC content of all optimized sequences fell between 30% and 60%, inside the desired range of 30% to 70%, so my models did not shift codon usage enough to push GC content to problematic levels.
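The GC-content check is simple to express in code. This sketch uses the 30% and 70% bounds from the paragraph above; the function names are hypothetical, and ambiguous bases (if any) count toward the length but not toward GC.

```python
def gc_content(dna):
    """Fraction of bases that are G or C."""
    dna = dna.upper()
    return (dna.count("G") + dna.count("C")) / len(dna) if dna else 0.0


def gc_in_range(dna, low=0.30, high=0.70):
    """True when GC content lies inside [low, high]."""
    return low <= gc_content(dna) <= high
```

Applied to every optimized sequence, `all(gc_in_range(s) for s in optimized)` gives a one-line pass/fail summary.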

After tuning the models and determining the best-performing architecture, I used feature analysis to confirm that my models could recognize evolutionary phenomena that underlie rare-codon usage in highly expressed genes. The best-performing model, the stacked LSTM, contained five stacked bidirectional LSTM layers with 128 units each. During training, individual units learn to respond to specific features and sequence properties. As the stacked LSTM model generated predictions for the testing sequences, I captured the output values of every recurrent unit and plotted them as heatmaps. The heatmaps showed that the stacked LSTM had learned multiple discernible evolutionary phenomena that affect rare-codon usage, such as the clusters of rare codons at the beginning of many genes, which aid translation initiation.
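One way to check whether a unit's heatmap tracks the rare-codon clusters described above is to build a per-position rare-codon signal and correlate it with that unit's activations. This is a hypothetical sketch: `activations` stands for one captured unit's outputs (one value per codon position), rare codons are assumed to be those with a codon weight below 0.3 (an arbitrary threshold), and the window size is illustrative.

```python
import math


def rare_codon_profile(codons, weights, threshold=0.3, window=5):
    """Sliding-window fraction of rare codons, centred on each position."""
    flags = [1.0 if weights.get(c, 1.0) < threshold else 0.0 for c in codons]
    half = window // 2
    profile = []
    for i in range(len(flags)):
        lo, hi = max(0, i - half), min(len(flags), i + half + 1)
        profile.append(sum(flags[lo:hi]) / (hi - lo))
    return profile


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0
```

A unit whose activations correlate strongly with the profile across many test genes is a candidate "rare-codon cluster" detector; the heatmap then shows where along each gene it fires.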

To validate the CodOpt models experimentally, I worked with my mentor to express a recombinant protein in Escherichia coli cells, using both an original DNA sequence and a sequence optimized with CodOpt. The protein expressed was pA-Tn5, a fusion protein containing protein A and Tn5 transposase. pA-Tn5 is crucial to CUT&Tag, a high-resolution method for studying protein–DNA interactions. The original DNA sequence for pA-Tn5 was sourced from the plasmid repository Addgene, and the optimized sequence was generated with the stacked LSTM. Both sequences were cloned into the same plasmid backbone, and the two plasmids were introduced into separate E. coli colonies. After expression and cell lysis, the proteins were separated by gel electrophoresis, and the pA-Tn5 bands were visualized by staining. The optimized plasmid produced visibly greater expression than the unoptimized plasmid, indicating that codon sequences optimized with CodOpt can substantially enhance protein expression.

Finally, I built a web application to give researchers around the globe access to the CodOpt models. With the app, researchers developing vaccines and pharmaceuticals can accelerate their work, broadening the impact of these crucial treatments. Researchers can log in to the application and select a host species, such as E. coli or yeast. When a researcher submits an input sequence, the application determines the amino acids of the corresponding protein using the genetic code. Then, the application loads the saved stacked LSTM model with ONNX Runtime for Python and predicts a codon probability distribution for each amino acid in the sequence. Finally, the application selects the highest-probability codon at each position and returns the resulting DNA sequence. Some researchers or organizations may want to integrate the models into their own pipelines; for this scenario, I built a JSON API through which developers can request optimized sequences programmatically and forward the output to other applications or databases.
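The inference path can be sketched as follows, under assumptions the text does not state: the ONNX model takes a float32 one-hot tensor of shape (1, length, 20) and returns codon probabilities of shape (1, length, 64) as its first output, in the codon order used below, and the model file name is hypothetical. `onnxruntime.InferenceSession`, `get_inputs()`, and `run()` are real ONNX Runtime entry points, but the model-specific layout is a guess. Unlike a plain argmax, `decode` here also restricts each position to codons that encode the requested amino acid, so the output always translates back to the input protein; that masking is a design choice of this sketch.

```python
BASES = "TCAG"
AMINO = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODONS = [a + b + c for a in BASES for b in BASES for c in BASES]
CODON_TO_AA = dict(zip(CODONS, AMINO))
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def translate(dna):
    """Translate in-frame DNA with the standard code, stopping at the first stop codon."""
    dna = dna.upper()
    protein = []
    for i in range(0, len(dna) - 2, 3):
        aa = CODON_TO_AA[dna[i:i + 3]]
        if aa == "*":
            break
        protein.append(aa)
    return "".join(protein)


def decode(protein, probs):
    """At each position, pick the most probable codon that encodes that residue."""
    out = []
    for aa, row in zip(protein, probs):
        allowed = [i for i, c in enumerate(CODONS) if CODON_TO_AA[c] == aa]
        out.append(CODONS[max(allowed, key=lambda i: row[i])])
    return "".join(out)


def predict_probs(protein, model_path="stacked_lstm_ecoli.onnx"):  # hypothetical file
    """Run the saved model with ONNX Runtime (input/output layout is assumed)."""
    import numpy as np
    import onnxruntime as ort

    x = np.zeros((1, len(protein), len(AMINO_ACIDS)), dtype=np.float32)
    for pos, aa in enumerate(protein):
        x[0, pos, AMINO_ACIDS.index(aa)] = 1.0
    session = ort.InferenceSession(model_path, providers=["CPUExecutionProvider"])
    input_name = session.get_inputs()[0].name
    probs = session.run(None, {input_name: x})[0]
    return probs[0].tolist()
```

End to end, `protein = translate(dna)` followed by `decode(protein, predict_probs(protein))` yields the optimized sequence; a JSON endpoint could wrap the same two calls.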

a. For teams, describe what each member worked on.

My project was not a team project.

3. What is new or novel about your project?

My project's host-independent data pipeline, systematic architecture comparison, and feature analysis are novel developments not found in previous studies, and its application of deep learning to codon optimization has only one precedent in the literature I reviewed. That previous study investigated a single model architecture rather than comparing multiple architectures in parallel. Furthermore, while other studies analyzed only E. coli, I created a host-independent data pipeline that researchers can extend to host species of their choice. Finally, I used feature analysis to demonstrate that neural networks can capture evolutionary phenomena that underlie rare-codon usage in natural highly expressed genes. These novel developments are explained in detail below.

a. Is there some aspect of your project's objective, or how you achieved it that you haven't done before?

To achieve my project's objective, I built and compared multiple neural networks inspired by natural-language processing.

In previous research projects, I had constructed machine-learning models for analyzing tabular data and computer-vision models for analyzing medical imagery. However, I had never constructed models based on natural-language processing architectures. Through this project, I learned about multiple new neural-network architectures and their applications to sequential data, including the capabilities of one-dimensional convolutional networks and the underpinnings of recurrent neural networks and transformers.

Furthermore, in previous projects, I had built and tuned individual models to address particular tasks. Although my models' architectures evolved over time, I had never created and compared multiple models with distinct architectures in parallel. Through this project, I conducted a systematic comparison of architectures to lay the foundation for future research that builds upon the most successful model.

Finally, although I had trained many neural networks in the past, I had never used feature analysis to understand my models' decision-making and the features they recognize. Through this project, I used feature analysis to identify discernible patterns learned and recognized by my stacked LSTM model.

b. Is your project's objective, or the way you implemented it, different from anything you have seen?

During my literature review before beginning my project, I found only one published study that applied artificial intelligence to codon optimization. The other studies and online tools I reviewed used standard techniques such as removing all rare codons or selecting codons randomly to match genomic codon frequencies.

The host-independent pipeline that I developed is novel. In published studies regarding codon optimization, data processing and analysis are performed almost exclusively for Escherichia coli. Although E. coli is a popular heterologous host, many researchers use other, more specialized species. For example, the Novavax COVID-19 vaccine is manufactured using moth cells, a relatively uncommon host. In my research, I developed a host-independent pipeline, through which researchers can develop optimization models for any host with available genomic sequencing data. Because I implemented the gCAI algorithm (an extension of the commonly used CAI algorithm), my pipeline does not require expression data, which may not be available for uncommon host species.

Furthermore, my systematic comparison of neural network architectures for codon optimization is novel. The only published study from my literature review that used deep learning for codon optimization tested a single deep-learning architecture. In my research, I identified eight distinct architectures commonly used for sequence analysis, including the revolutionary transformer architecture that underlies ChatGPT and other modern breakthroughs in AI. I built models based on each architecture, and I tuned all eight models to compare their final performance. With this systematic analysis, I identified the stacked LSTM as the most successful architecture for codon optimization.

After comparing multiple model architectures, I applied feature analysis, a step not found in previous codon-optimization studies, to understand the patterns my model uses when generating codon sequences. Although neural networks have transformed data science, the abstract features they learn remain notoriously difficult for researchers to decipher. Feature analysis, however, can reveal the patterns that a neural network recognizes and uses to generate output. My most successful architecture was the stacked LSTM, which contains five stacked bidirectional LSTM layers, each with 128 units. By generating heatmaps to visualize the output of each unit, I identified multiple discernible evolutionary details related to codon usage that my stacked LSTM learned during training, such as the clustering of rare codons at the beginning and end of many genes.

c. If you believe your work to be unique in some way, what research have you done to confirm that it is?

I conducted an extensive literature review of studies that apply neural networks to genetic sequence analysis and, specifically, to codon optimization. Although neural networks have gained popularity, I found only one study employing deep learning for codon optimization, and I found no research that attempted to construct a host-independent pipeline for practical use by researchers. Furthermore, I found no study that systematically compared multiple architectures with different strengths for sequence analysis. Finally, I found no other studies that deployed such a system through a web application to demonstrate and enable practical application of the models.

4. What was the most challenging part of completing your project?

In the course of building my computational pipeline and training my models, I faced challenges with my dataset's size and my models' many configurations. The sequencing data I downloaded for Escherichia coli totalled about ten million genes and almost 12 gigabytes, so I spent time considering numerous methods to reduce the dataset's size for training. Later, as I trained my models, I had difficulty with tuning because, at first, I changed multiple hyperparameters at the same time, obscuring which changes improved my models' performance. These challenges are explained in detail below.

a. What problems did you encounter, and how did you overcome them?

When programming and testing my data pipeline, my primary hurdle was the vast amount of genetic sequencing data available for curating my training dataset. For Escherichia coli, the most extensively studied model organism, the Assembly database contained over 190,000 entries. Due to the size of this data, I downloaded only the complete genomes available in RefSeq, a database curated by the NCBI to have less redundancy than the general Assembly database. Still, this download consisted of over two thousand genomes with over ten million sequences, totalling almost 12 gigabytes. This dataset was far too large for training a neural network within my time constraints, so I considered techniques for reducing its size, such as random sampling.

I sought to reduce my dataset of ten million genes to around fifty thousand genes. However, I feared that a random sample this small relative to the full dataset might not be representative. I realized that clustering similar sequences and keeping one representative sequence per cluster would shrink the dataset while preserving its diversity. I researched multiple sequence analysis tools that support clustering, including CD-HIT-EST, USEARCH, and VSEARCH, and I chose VSEARCH for its ease of installation and efficient clustering implementation. Clustering the ten million sequences took almost thirty-five hours of running VSEARCH, but I ultimately acquired a representative sample that was small enough to train my models.
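The clustering step can be driven from Python. This sketch builds a VSEARCH command using its `--cluster_fast`, `--id`, `--centroids`, and `--threads` options; `--centroids` writes one representative (centroid) sequence per cluster. The 0.9 identity threshold, thread count, file names, and helper names are assumptions, since the text does not state the parameters used. A higher identity threshold produces more clusters, so the threshold controls how close the output comes to a target size such as fifty thousand sequences.

```python
import shutil
import subprocess


def vsearch_cluster_command(input_fasta, centroids_fasta, identity=0.9, threads=8):
    """Build a VSEARCH command that clusters sequences and writes cluster centroids."""
    return [
        "vsearch",
        "--cluster_fast", input_fasta,    # sequences are sorted by length before clustering
        "--id", str(identity),            # minimum identity for joining a cluster
        "--centroids", centroids_fasta,   # one representative sequence per cluster
        "--threads", str(threads),
    ]


def count_fasta_records(path):
    """Count sequences in a FASTA file by counting header lines."""
    with open(path) as handle:
        return sum(1 for line in handle if line.startswith(">"))


def run_clustering(input_fasta, centroids_fasta, **kwargs):
    """Run VSEARCH if it is installed and return the number of centroids written."""
    if shutil.which("vsearch") is None:
        raise FileNotFoundError("vsearch is not on PATH")
    cmd = vsearch_cluster_command(input_fasta, centroids_fasta, **kwargs)
    subprocess.run(cmd, check=True)
    return count_fasta_records(centroids_fasta)
```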

Alongside the challenges of preprocessing my data, I had some difficulty tuning all my model architectures so that I could evaluate and compare them fairly. Since many neural networks exist for sequence analysis, each with specific strengths and weaknesses, I sought to establish which architecture would best succeed at codon optimization. Thus, I constructed eight distinct networks and individually tuned their hyperparameters over multiple rounds of training. Difficulties arose because I initially changed multiple hyperparameters at the same time, which prevented me from determining which hyperparameter had changed my model's performance. I then switched to changing the hyperparameters one by one. Although this approach required more time, it let me identify a strong value for each hyperparameter and produce my best-performing models.
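The one-at-a-time strategy is a form of coordinate search: vary a single hyperparameter while holding the others fixed, keep the best value, then move on to the next. This sketch assumes a caller-supplied `evaluate(config)` function that trains a model and returns a validation score (higher is better); the function name and search space are hypothetical. Like any coordinate search, it can miss interactions between hyperparameters, which is why it is repeated for a few rounds.

```python
def tune_one_at_a_time(evaluate, search_space, initial, rounds=2):
    """Coordinate search over hyperparameters, logging every trial."""
    best = dict(initial)
    best_score = evaluate(best)
    history = [(dict(best), best_score)]
    for _ in range(rounds):
        for name, values in search_space.items():
            for value in values:
                if value == best[name]:
                    continue
                trial = {**best, name: value}  # change exactly one hyperparameter
                score = evaluate(trial)
                history.append((trial, score))
                if score > best_score:
                    best, best_score = trial, score
    return best, best_score, history
```

The returned `history` doubles as the documentation log recommended later in this report: every configuration tried and its score.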

b. What did you learn from overcoming these problems?

In overcoming my difficulties with data preprocessing, I learned the value of continuously reviewing literature while conducting a project. I conducted a thorough literature review before beginning my project, but I did not consider challenges such as the magnitude of the NCBI data available. By returning to the literature after encountering this challenge, I identified clustering with VSEARCH as a potential solution. With VSEARCH, I attained a small but representative sample of the data I had downloaded for Escherichia coli. Therefore, continuing to review the literature while conducting a project can reveal new and effective solutions to novel challenges.

In overcoming my difficulties with hyperparameter tuning, I learned the importance of documenting and versioning changes when working on a computational project. By consistently documenting the performance of different models as I tuned them and continuously versioning my code, I could easily organize changes and determine which exact model architectures yielded the best performance. Therefore, consistent documentation and versioning can help organize and streamline a computational project.

5. If you were going to do this project again, are there any things you would do differently the next time?

If I were beginning my project once more, I would spend more time reviewing literature related to large genomic sequencing datasets. My initial literature review focused on the biological underpinnings of codon optimization and the computational underpinnings of natural-language processing, but I assumed that I would not encounter any hurdles while preprocessing my data. By spending more time reviewing the tools available for processing and filtering large genomic datasets, I could have avoided challenges related to the magnitude of the NCBI data available. Furthermore, I would invest earlier in computational resources available through Amazon Web Services (AWS). Through an AWS instance, I was able to run longer algorithms and train larger models than was possible on my personal computer.

6. Did working on this project give you any ideas for other projects?

Working on this project has encouraged me to consider many options for future work.

In my research, I sought to optimize codon sequences that encode recombinant proteins. However, other regions associated with a gene also contribute to its expression. For example, genes are preceded by promoter sequences that initiate transcription. In future research, I could build deep-learning models to create optimal promoter sequences by emulating those upstream of natural highly expressed genes.

In my research, I created and trained all my neural networks from scratch. To improve performance in future iterations of CodOpt, I could use transfer learning and fine-tuning to leverage the knowledge learned by other deep-learning systems. For example, the AlphaFold model, created by Google's DeepMind team, predicts protein structure from amino-acid sequences with accuracy that far surpassed previous computational methods. By using initial layers and pretrained weights from the AlphaFold model, my codon-optimization models could use the features and patterns that AlphaFold recognizes in amino-acid sequences. Alternatively, I could use output from AlphaFold as another input to my models, giving my models information about how an amino-acid sequence is likely to fold.

In my research, I successfully built a host-independent pipeline that enables researchers to construct models for uncommon heterologous hosts using only sequencing data. However, in some scenarios, full-genome sequencing may not be available or obtainable. Transfer learning, which fine-tunes neural networks for new tasks, could address this data gap. Future research could use transfer learning to build networks for less common hosts by fine-tuning the models trained for popular hosts.

In my research, I compared a sequence optimized with CodOpt to an unoptimized sequence by collaborating with my mentor to express both in E. coli. The evidence from this experiment indicates that CodOpt can substantially increase recombinant protein expression. Further lab trials could verify this increase for multiple proteins and compare CodOpt to commercial optimization tools on metrics such as expression, protein folding, and protein solubility.

7. How did COVID-19 affect the completion of your project?

During the COVID-19 pandemic, I saw researchers around the world collaborate to develop vaccines and other therapeutics that have now saved millions of lives. Seeing the success of these efforts spurred my interest in vaccine and pharmaceutical development. In particular, I focused on the efficiency of vaccine manufacturing, as rapid vaccine design is crucial when responding to epidemics and pandemics such as COVID-19. As I researched the techniques used to produce vaccines, such as recombinant expression in heterologous hosts, I learned that codon optimization can drive efficient vaccine production by improving translational efficiency. I also learned, however, about the drawbacks of common optimization techniques and especially the dangerous consequences of generating misfolded proteins. As I considered how codon optimization (translating an amino-acid sequence to an optimal codon sequence) resembles the task of translating sentences between languages, I recognized that I could apply my skills in deep learning to address the drawbacks of common codon-optimization algorithms.