Understanding Dog Behavior through Visual and Aural Sensing Using Deep Learning

Abstract:

Bibliography/Citations:

[1] K. Ehsani, H. Bagherinezhad, J. Redmon, R. Mottaghi, A. Farhadi, Who let the dogs out? Modeling dog behavior from visual data, IEEE Conference on Computer Vision and Pattern Recognition, pp. 4051-4060, 2018.

[2] J. Hsu, “Dog cam” trains computer vision software for robot dogs, IEEE Spectrum, Apr 18, 2018.

[3] M. Marks, Q. Jin, O. Sturman, L. von Ziegler, S. Kollmorgen, W. von der Behrens, V. Mante, J. Bohacek, M. Fatih Yanik, Deep-learning-based identification, tracking, pose estimation and behaviour classification of interacting primates and mice in complex environments, Nature Machine Intelligence, vol. 4, pp. 331-340, 2022.

[4] J. Bryner, Brain scans reveal dogs' thoughts, Scientific American, May 8, 2022.

[5] Canine senses, Paws Chicago (https://www.pawschicago.org/news-resources/all-about-dogs/doggy-basics/canine-senses).

[6] J. Stromberg, Why scientists believe dogs are smarter than we give them credit, Vox, Jan 22, 2016.

[7] P. Agrawal, J. Carreira, and J. Malik, Learning to see by moving, International Conference on Computer Vision, pp. 37-45, 2015.

[8] A. Fathi, A. Farhadi and J. M. Rehg, Understanding egocentric activities, International Conference on Computer Vision, pp. 407-414, 2011.

[9] Y. J. Lee, J. Ghosh and K. Grauman, Discovering important people and objects for egocentric video summarization, IEEE Conference on Computer Vision and Pattern Recognition, pp. 1346-1353, 2012.

[10] S. L. Pintea, J. C. van Gemert, and A. W. M. Smeulders, Déjà vu: Motion prediction in static images, European Conference on Computer Vision, pp. 172-187, 2014.

[11] R. Gonzalez and R. Woods, Digital Image Processing, 3rd Edition, Pearson Prentice Hall, pp. 414-428, 2008.

[12] J. Kim and N. Moon, Dog Behavior Recognition Based on Multimodal Data from a Camera and Wearable Device, Applied Sciences, 12(6):3199, 2022.

[13] A. Hussain, S. Ali, Abdullah, H.-C. Kim, Activity Detection for the Wellbeing of Dogs Using Wearable Sensors Based on Deep Learning, IEEE Access, vol. 10, pp. 53153-53163, 2022.

[14] C.T. Siwak, H.L. Murphey, B.A. Muggenburg, N.W. Milgram, Age-dependent decline in locomotor activity in dogs is environment specific, Physiology & Behavior, 75(1-2), pp. 65-70, 2002.

[15] A. Quaranta, M. Siniscalchi, and G. Vallortigara, Asymmetric tail-wagging response by dogs to different emotive stimuli, Current Biology, vol. 17, no. 6, pp. 199-201, 2007.

[16] C.J. Völter, L. Lonardo, M.G.G.M. Steinmann, C.F. Ramos, K. Gerwisch, M.T. Schranz, I. Dobernig, L. Huber, Unwilling or unable? Using three-dimensional tracking to evaluate dogs' reactions to differing human intentions, Proceedings of the Royal Society B, vol. 290, no. 1991, 2023.

[17] Y. LeCun, L. Bottou, Y. Bengio and P. Haffner, Gradient-based learning applied to document recognition, Proceedings of the IEEE, vol. 86, no. 11, pp. 2278-2324, Nov. 1998.

[18] J. Martinez, M. J. Black, J. Romero, On human motion prediction using recurrent neural networks, IEEE Conference on Computer Vision and Pattern Recognition, pp. 2891-2900, 2017.

[19] J. Liu, A. Shahroudy, D. Xu, and G. Wang, Spatio-temporal LSTM with trust gates for 3D human action recognition, European Conference on Computer Vision, pp. 816-833, 2016.

[20] S. Venugopalan, M. Rohrbach, J. Donahue, R. Mooney, T. Darrell, K. Saenko, Sequence to Sequence – Video to Text, IEEE International Conference on Computer Vision, pp. 4534-4542, 2015.

Additional Project Information

Research Plan:

- Question or Problem being addressed

In this project, we propose to study a dog’s behavior and reactions when she is exposed to different stimuli, and to use observations of animal intelligence to better understand human intelligence.

- Goals/Expected Outcomes/Hypotheses

Our hypothesis is that an association exists between a dog’s behavior and what the dog sees and hears, and that this association can be learned and understood. The purpose of this project is to understand and predict a dog's movements when she is exposed to visual and audio stimuli, as perceived from the dog’s egocentric perspective. Using deep learning techniques, we plan to learn and model the association between a dog's movements and the video and audio signals sensed by the dog. These findings could enable more efficient dog training. In addition, this project may help us better understand animal intelligence, which could benefit the development of new AI technologies.

- Description in detail of method or procedures


- Procedures: Detail all procedures and experimental design to be used for data collection

- Data Analysis: Describe the procedures you will use to analyze the data/results that answer research questions or hypotheses

We plan to conduct this research by analyzing visual and auditory information from the environment perceived by a dog.

A GoPro camera will be attached to the dog’s harness to capture what the dog sees and hears. In addition, a hand-held cell phone camera will record the dog’s movements and the sounds in the environment. Data collection will focus on the dog’s surroundings and corresponding movements, from both the dog’s and a human’s perspective. The dog will be taken to different environments, including parks and neighborhood streets. Auditory stimuli will include the sounds of these environments.

Data analysis will consist of three parts. First, visual and audio information will be extracted from the video data. The visual and audio streams will be aligned in time, and so will the GoPro and cell phone recordings. Second, the visual data will be annotated with labels for the dog’s movements. Third, a deep learning model will be built to learn the association between the dog’s movements and the visual and audio information.
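The time-alignment step can be sketched in plain Python. The sketch makes two assumptions: each recording's audio has already been decoded into a list of float samples, and every recording contains the same loud start sound. The function names, the window size, and the 50% energy threshold are hypothetical choices for illustration. They are not the settings used in this project.

```python
# Hypothetical sketch: align recordings that share an audible start sound.
# Assumes audio is already decoded into lists of float samples.

def onset_sample(samples, window=256, threshold=0.5):
    """Return the start index of the first window whose RMS energy is at
    least `threshold` times the loudest window's RMS, or None if silent."""
    rms = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        rms.append((sum(x * x for x in chunk) / window) ** 0.5)
    if not rms or max(rms) == 0:
        return None
    peak = max(rms)
    for i, value in enumerate(rms):
        if value >= threshold * peak:
            return i * window  # resolution is one window
    return None


def start_time(samples, sample_rate, **kwargs):
    """Time in seconds of the start sound, or None if none is found."""
    idx = onset_sample(samples, **kwargs)
    return None if idx is None else idx / sample_rate


def map_frame(frame_idx, fps_src, t0_src, fps_dst, t0_dst):
    """Map a frame of the source video to the destination frame showing the
    same moment, given each video's start-sound time in seconds."""
    t_since_start = frame_idx / fps_src - t0_src
    return round((t_since_start + t0_dst) * fps_dst)


def audio_span(frame_idx, fps, sample_rate):
    """Sample range [start, end) that plays during one video frame,
    assuming the video and audio tracks start at the same time."""
    start = round(frame_idx / fps * sample_rate)
    end = round((frame_idx + 1) / fps * sample_rate)
    return start, end


gopro_audio = [0.0] * 1000 + [1.0] * 500  # start sound at 1.0 s (toy 1 kHz rate)
phone_audio = [0.0] * 3000 + [1.0] * 500  # start sound at 3.0 s
t_gopro = start_time(gopro_audio, 1000, window=100)
t_phone = start_time(phone_audio, 1000, window=100)
print(map_frame(60, 30, t_gopro, 30, t_phone))  # 120
```

Suppose the start sound occurs 1.0 s into the GoPro video and 3.0 s into the phone video. Frame 60 of a 30 fps GoPro clip is 1.0 s after the start sound, so it corresponds to frame 120 of a 30 fps phone clip.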

The project involves attaching a GoPro camera to the harness worn by our family dog, a female Golden Retriever. Videos are recorded while the dog is being walked. No experiments are performed on the dog.

Because the average dog is often estimated to have the intelligence of roughly a 2.5-year-old human child, we study dog behavior as an entry point to understanding human behavior and intelligence in the long run.

Questions and Answers

1. What was the major objective of your project and what was your plan to achieve it?

The major objective of my project was to understand and model how a dog behaves in response to visual and audio stimuli. My plan was to collect video and audio data from a dog’s perspective and create a database of egocentric visual and audio stimuli that represents what a dog sees and hears. This involved mounting a GoPro camera on my family dog’s harness and taking her to neighborhood streets and parks to make recordings. Once the data was collected, I planned to use a deep learning approach to learn and model the association between a dog’s reactions and the visual and audio stimuli she perceives. Finally, I planned to evaluate the learned model and analyze the experimental results to gain insight into how a dog behaves and reacts to the visual and audio stimuli in her surroundings.

a. Was that goal the result of any specific situation, experience, or problem you encountered?

I’m very interested in studying artificial and natural intelligence. Current AI systems typically learn to make decisions from large datasets, while humans can learn to do the same from very few examples. As a result, AI can make mistakes that seem trivial to humans. For example, self-driving Teslas can misrecognize obstacles on the road, which has contributed to fatal accidents. Last summer, I saw an interesting YouTube video of dogs reacting to realistic street art depicting a ditch in the road. Unlike AI, the dogs knew to stay away even though they had never seen it before. I wanted to find out what makes AI and natural intelligence so different, and how we could apply lessons from natural intelligence to improve AI.

b. Were you trying to solve a problem, answer a question, or test a hypothesis?

Yes, I was trying to answer a question: how does a dog react to perceived visual and audio stimuli in its surroundings?

2. What were the major tasks you had to perform in order to complete your project?

First, I had to collect visual and audio data from a dog’s perspective. To do so, I mounted a GoPro on my dog’s harness and walked her in different neighborhoods and parks while recording what she saw and heard from an egocentric perspective (dog view). I also recorded my dog and her surroundings with an external iPhone camera (human view).

After the videos were recorded, I used OpenCV to extract and process image frames and audio signals from them, generating image frames from both the dog view and the human view. I aligned the timestamps of the image frames and their corresponding audio signals based on an audible start sound. I manually annotated each image frame with the dog’s corresponding action, using four labels: Sit, Stand, Walk, and Smell. I also created motion fields using template matching.
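Template matching for motion fields can be illustrated with simple block matching. Each small block of one frame is compared against shifted positions in the next frame, and the shift with the smallest sum of squared differences (SSD) becomes that block's motion vector. This is a hypothetical pure-Python sketch on grayscale frames stored as lists of rows. The block size, search radius, and function name are assumptions. In practice, OpenCV's `cv2.matchTemplate` with `cv2.TM_SQDIFF` performs the same kind of SSD comparison much faster.

```python
# Hypothetical sketch: block-matching motion field between two grayscale
# frames (lists of rows of numbers), using sum of squared differences.

def block_motion(prev, curr, block=4, search=2):
    """For each block x block patch of `prev`, return the displacement
    (dy, dx) within +/- `search` pixels whose patch in `curr` has the
    smallest SSD. The result is a grid of (dy, dx) tuples."""
    h, w = len(prev), len(prev[0])
    field = []
    for by in range(0, h - block + 1, block):
        row = []
        for bx in range(0, w - block + 1, block):
            best = None  # (ssd, dy, dx)
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    y0, x0 = by + dy, bx + dx
                    if y0 < 0 or x0 < 0 or y0 + block > h or x0 + block > w:
                        continue  # candidate window falls outside the frame
                    ssd = sum(
                        (prev[by + i][bx + j] - curr[y0 + i][x0 + j]) ** 2
                        for i in range(block)
                        for j in range(block)
                    )
                    if best is None or ssd < best[0]:
                        best = (ssd, dy, dx)
            row.append((best[1], best[2]))  # (0, 0) always fits, so best is set
        field.append(row)
    return field


# Toy frames: a gradient image, and the same image shifted right by 1 pixel.
prev = [[y * 8 + x for x in range(8)] for y in range(8)]
curr = [[prev[y][x - 1] if x >= 1 else prev[y][0] for x in range(8)] for y in range(8)]
field = block_motion(prev, curr)
print(field[0][0])  # (0, 1)
```

Blocks near the right edge cannot search to the right. Their vectors are less reliable, which is a common limitation of block matching near frame borders.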

Once the dataset of images, motion fields, audio, and ground truth labels was created, I used TensorFlow to implement an extended Convolutional Neural Network (eCNN) that learns to predict the dog’s action from the image, motion, and audio features perceived by the dog.
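The eCNN's internal layers are not described here, so the sketch below only illustrates one common way to combine modalities, called late fusion. Feature vectors from the image, motion, and audio inputs are concatenated, then passed through a linear layer and a softmax over the four action labels. In a real model, the feature vectors would come from convolutional branches and the weights would be learned with TensorFlow. Here they are hand-picked toy values, and the function name is hypothetical.

```python
import math

ACTIONS = ["Sit", "Stand", "Walk", "Smell"]


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(image_feat, motion_feat, audio_feat, weights, biases):
    """Late fusion (hypothetical): concatenate the per-modality feature
    vectors, apply one linear layer, and return (label, probabilities)."""
    fused = list(image_feat) + list(motion_feat) + list(audio_feat)
    if len(weights) != len(ACTIONS) or len(biases) != len(ACTIONS):
        raise ValueError("need one weight row and one bias per action")
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    scores = [sum(w * v for w, v in zip(row, fused)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(scores)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return ACTIONS[best], probs


# Toy example: 2 image features, 1 motion feature, 1 audio feature.
weights = [
    [0.0, 0.0, 0.0, 0.0],  # Sit
    [0.0, 0.0, 0.0, 0.0],  # Stand
    [1.0, 0.0, 0.0, 0.0],  # Walk
    [0.0, 0.0, 0.0, 1.0],  # Smell
]
label, probs = fuse_and_classify([1.0, 0.0], [0.0], [0.5], weights, [0.0] * 4)
print(label)  # Walk
```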

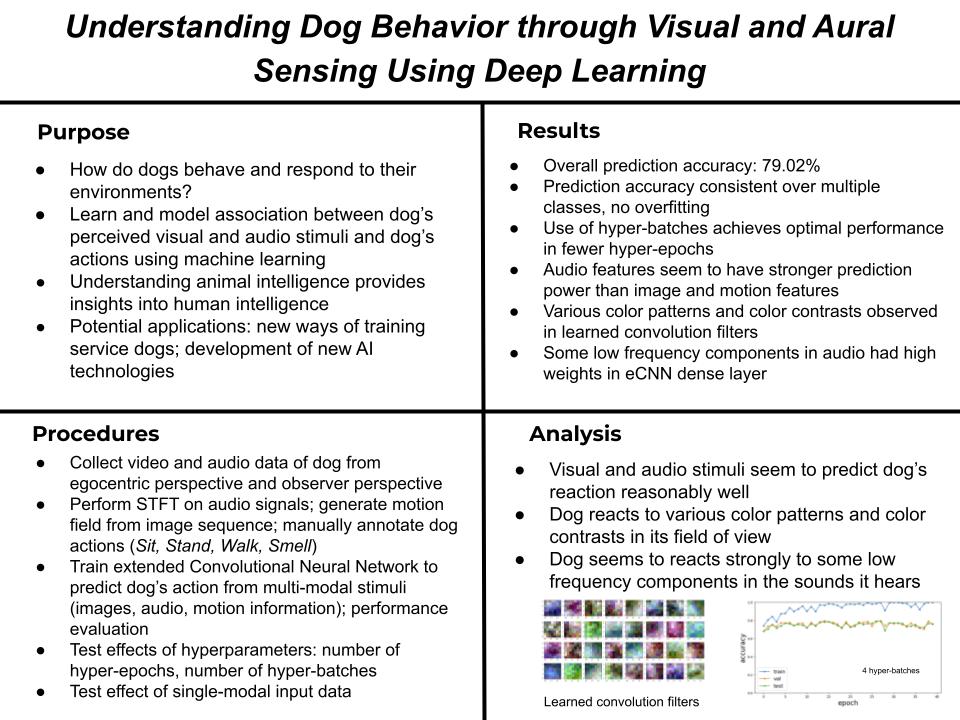

I ran experiments with different parameter settings, such as the number of epochs and batches, and analyzed the classification results. I also observed how my dog reacts to color patterns in what she sees and to different frequency components in what she hears.
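The project does not specify how frequency components were measured. One simple option, assumed here, is to take a short audio window, compute its discrete Fourier transform, and sum the energy that falls into a few frequency bands. The naive DFT below is O(n^2) and is only meant to show the idea. `numpy.fft.rfft` would be the practical choice for real recordings. The band edges and function name are hypothetical.

```python
import cmath
import math


def band_energies(samples, sample_rate, bands):
    """Spectral energy of `samples` in each [low_hz, high_hz) band, using a
    naive O(n^2) discrete Fourier transform over non-negative frequencies."""
    n = len(samples)
    energies = [0.0] * len(bands)
    for k in range(n // 2 + 1):
        coeff = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        freq = k * sample_rate / n  # frequency of DFT bin k in Hz
        for i, (low, high) in enumerate(bands):
            if low <= freq < high:
                energies[i] += abs(coeff) ** 2
    return energies


# Toy example: 80 samples of a 100 Hz tone at an 800 Hz sample rate.
rate = 800
tone = [math.sin(2 * math.pi * 100 * t / rate) for t in range(80)]
bands = [(0, 50), (50, 200), (200, 400)]
energies = band_energies(tone, rate, bands)
print(max(range(len(bands)), key=lambda i: energies[i]))  # 1
```

Per-band energies like these could serve as a compact audio feature vector alongside the image and motion features.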

a. For teams, describe what each member worked on.

This was an individual project.

3. What is new or novel about your project?

There are three novel ideas in my project. First, I study the dog’s behavior using data collected from the dog’s egocentric perspective. Most previous work on animal intelligence monitors animal behavior from a human observer’s perspective. In my project, the data collection system was designed so the data reflects what the dog sees and hears. Second, previous work primarily used visual information about a dog’s surroundings. In addition to visual stimuli, I included the audio stimuli perceived by the dog in the modeling framework. Third, I proposed an extended Convolutional Neural Network (eCNN) model to make use of the multi-modal data.

a. Is there some aspect of your project's objective, or how you achieved it that you haven't done before?

This project is entirely new to me. It is my first time using deep learning and developing and implementing an eCNN framework. It is also my first time using OpenCV to develop and compute image and audio features. I took deep learning courses on Coursera and taught myself OpenCV.

b. Is your project's objective, or the way you implemented it, different from anything you have seen?

To my knowledge, no one has incorporated audio stimuli into studying how dogs behave in response to their environment. Ultimately, the goal of this project is to understand natural intelligence, and it is important to include different sensing modalities to understand what the dog is reacting to.

c. If you believe your work to be unique in some way, what research have you done to confirm that it is?

I performed a literature review and found that other researchers studying dog behavior relied on video footage to capture the dog’s behavior and surroundings. In some cases they combined it with wearable motion sensors, but they did not use audio. My project incorporates audio stimuli into the data, and I observed that audio plays a key role in how a dog behaves.

4. What was the most challenging part of completing your project?

There were two challenging parts of completing my project. One was getting my dog to cooperate during data acquisition. The other was the large amount of computing power required to train the deep neural network.

a. What problems did you encounter, and how did you overcome them?

Initially, I used an Arduino board with motion sensors (IMUs) attached to my dog’s legs to record her movements. However, it was difficult to get my dog to cooperate and to keep the sensors attached to her. I changed the design and replaced the motion sensors with an external mobile phone camera to record the dog’s movements. I then manually annotated and labeled the dog’s actions.

Training the eCNN model was time-consuming on my personal computer, so I introduced hyper-batches to speed up the computation. Compared to using one big batch, training with hyper-batches reached its best performance much earlier, after fewer hyper-epochs.
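“Hyper-batch” is this project’s own term, and its exact definition is not given. One possible reading, assumed here, is that the shuffled training set is split into chunks that fit in memory, and each chunk is then split into mini-batches. Under that assumption, one hyper-epoch can be sketched as a generator. The function name and sizes are hypothetical.

```python
import random


def hyper_batches(samples, hyper_size, batch_size, seed=0):
    """Yield (hyper_batch_index, mini_batch) pairs covering one hyper-epoch.

    Hypothetical interpretation: shuffle the dataset, split it into
    hyper-batches of `hyper_size` samples (e.g. as many frames as fit in
    memory), then split each hyper-batch into mini-batches of `batch_size`.
    """
    order = list(samples)
    random.Random(seed).shuffle(order)  # seeded for reproducibility
    for h, start in enumerate(range(0, len(order), hyper_size)):
        chunk = order[start:start + hyper_size]
        for b in range(0, len(chunk), batch_size):
            yield h, chunk[b:b + batch_size]


batches = list(hyper_batches(range(10), hyper_size=4, batch_size=3))
print([h for h, _ in batches])  # [0, 0, 1, 1, 2]
```

One plausible reason for the faster convergence is the number of gradient updates per pass. With one big batch, each pass over the data makes a single update, while splitting the data makes many smaller updates per pass.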

b. What did you learn from overcoming these problems?

In research, it is very common to run into unexpected problems that require a change of plans. We need to stay creative and come up with alternative solutions to overcome these problems.

5. If you were going to do this project again, are there any things you would do differently next time?

If I were to do this project again, I would start my data analysis earlier, before all of the data collection was finished. I would also collect more data.

6. Did working on this project give you any ideas for other projects?

Yes. A very interesting research idea stemming from this project is that we can use the same research framework to study how elderly people with cognitive decline react to various stimuli in their surroundings. The insights may help with designing patient-friendly living environments and effective therapy to slow down their mental decline.

7. How did COVID-19 affect the completion of your project?

COVID-19 did not affect the completion of my project.