Semi-Supervised Pulmonary Auscultation Analysis with Cross Pseudo Supervision

Abstract:

Bibliography/Citations:

M. Sarkar, I. Madabhavi, N. Niranjan, and M. Dogra, “Auscultation of the respiratory system,” Annals of Thoracic Medicine, vol. 10, no. 3, pp. 158–168, 2015.

B. Wang et al., “Characteristics of Pulmonary Auscultation in Patients with 2019 Novel Coronavirus in China,” Respiration, vol. 99, no. 9, pp. 755–763, 2020, doi: https://doi.org/10.1159/000509610.

V. Abreu, A. Oliveira, J. Alberto Duarte, and A. Marques, “Computerized respiratory sounds in paediatrics: A systematic review,” Respiratory Medicine: X, vol. 3, p. 100027, Nov. 2021.

J. Heitmann et al., “DeepBreath—automated detection of respiratory pathology from lung auscultation in 572 pediatric outpatients across 5 countries,” NPJ Digital Medicine, vol. 6, no. 1, Jun. 2023.

A. Tarvainen and H. Valpola, “Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results,” Neural Information Processing Systems, 2017.

Z. Ke, D. Qiu, K. Li, Q. Yan, and R. Lau. “Guided collaborative training for pixel-wise semi-supervised learning,” in European Conference on Computer Vision (ECCV), 2020.

X. Chen, Y. Yuan, G. Zeng and J. Wang, "Semi-supervised semantic segmentation with cross pseudo supervision," in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, pp. 2613-2622, 2021.

S. Rietveld, M. Oud, and E. H. Dooijes, “Classification of asthmatic breath sounds: Preliminary results of the classifying capacity of human examiners versus artificial neural networks,” Computers and Biomedical Research, vol. 32, no. 5, pp. 440–448, Oct. 1999.

M. Aykanat, Ö. Kılıç, B. Kurt, and S. Saryal, “Classification of lung sounds using convolutional neural networks,” EURASIP Journal on Image and Video Processing, vol. 2017, no. 1, 2017.

T. Fernando, S. Sridharan, S. Denman, H. Ghaemmaghami and C. Fookes, “Robust and interpretable temporal convolution network for event detection in lung sound recordings,” in IEEE Journal of Biomedical and Health Informatics, vol. 26, no. 7, pp. 2898-2908, 2022.

F.-S. Hsu et al., “Benchmarking of eight recurrent neural network variants for breath phase and adventitious sound detection on a self-developed open-access lung sound database—HF_Lung_V1,” PLOS ONE, vol. 16, no. 7, p. e0254134, Jul. 2021.

D. Chamberlain, R. Kodgule, D. Ganelin, V. Miglani and R. R. Fletcher, “Application of semi-supervised deep learning to lung sound analysis,” 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 2016.

R. Lang, Y. Fan, G. Liu, and G. Liu, “Analysis of unlabeled lung sound samples using semi-supervised convolutional neural networks,” Applied Mathematics and Computation, vol. 411, p. 126511, 2021.

N. Turpault, R. Serizel, A. Shah, and J. Salamon, “Sound event detection in domestic environments with weakly labeled data and soundscape synthesis,” In Workshop on Detection and Classification of Acoustic Scenes and Events, New York City, USA, 2019.

I. Kavalerov et al., “Universal sound separation,” in IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), 175–179, 2019.

Z. Ye et al., “Sound Event Detection Transformer: An event-based end-to-end model for sound event detection,” arXiv.org, Nov. 11, 2021. https://arxiv.org/abs/2110.02011

J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” IEEE Computer Vision and Pattern Recognition (CVPR), 2009.

K. He, X. Zhang, S. Ren and J. Sun, “Deep residual learning for image recognition,” 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016, pp. 770-778.

J. F. Gemmeke et al., “Audio Set: An ontology and human-labeled dataset for audio events,” 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 2017, pp. 776-780.

Q. Kong, Y. Cao, T. Iqbal, Y. Wang, W. Wang, and M. D. Plumbley, “PANNs: Large-scale pretrained audio neural networks for audio pattern recognition,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 2880–2894, 2020.

B. Rocha et al., “An open access database for the evaluation of respiratory sound classification algorithms,” Physiological Measurement, 2019.

T. Sainburg and T. Q. Gentner, “Toward a Computational Neuroethology of Vocal Communication: From Bioacoustics to Neurophysiology, Emerging Tools and Future Directions,” Frontiers in Behavioral Neuroscience, vol. 15, 2021.

Z. Ren, R. Yeh, and A. Schwing, “Not all unlabeled data are equal: Learning to weight data in semi-supervised learning,” in Neural Information Processing Systems, 2020.

Additional Project Information

Research Plan:

Introduction and Problem Definition

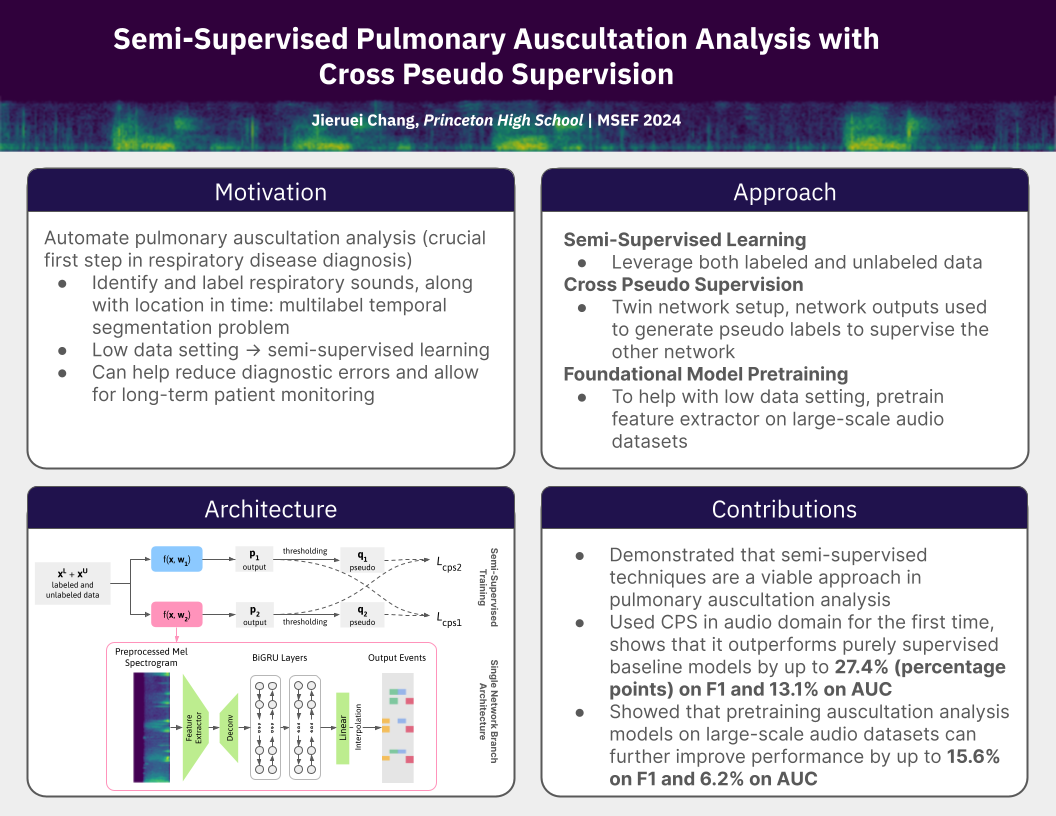

Pulmonary auscultation, or the act of listening to lung sounds with a stethoscope, provides vital information to aid in respiratory disease diagnosis by detecting the inhalation-exhalation cycle as well as abnormalities such as crackles, rhonchi, stridor, or wheezes (Sarkar 2015). Auscultation is non-invasive, making it a very safe procedure; but despite the simplicity of the method, it is effective because respiratory diseases directly affect how air passes through the lungs (for example by blocking or contracting airways), so the sounds produced vary as a direct byproduct of the underlying condition. It has been shown that pneumonia resulting from COVID-19 show distinctive auscultation characteristics (Wang 2020); in addition, a review of 28 studies involving a total of 2,032 patients showed that these respiratory sounds are useful indicators of respiratory illnesses such as asthma, cystic fibrosis, and bronchiolitis (Abreu 2021).

Although there have been great advances in healthcare throughout the last century, pulmonary auscultation largely still requires the expertise of medical professionals, due to the high degree of variability present in lung recordings and the difficulty in distinguishing normal from abnormal sounds. In recent decades, electronic stethoscopes such as the Littmann 3200 have been developed for long-duration auscultation to aid in patient monitoring, but they still require human expertise for analysis; additionally, as physicians may not have the time or auditory acuity to properly analyze the recordings produced, manual analysis can lead to incorrect diagnoses of respiratory diseases and significantly impact patient outcomes (Heitmann 2023).

This motivates the development of machine learning models that can automate the process of detecting adventitious sounds in electronic stethoscope recordings. This is not a traditional single-label classification problem, as sound labels can overlap and the temporal position of these sounds is important. This is because different diseases cause adventitious sounds to appear in differing positions in the inhalation-exhalation cycle. For example, crackle sounds occurring in the middle of the inhalation phase are a symptom of interstitial lung fibrosis and pneumonia, while crackle sounds appearing at the beginning of inhalation and during exhalation are a symptom of chronic bronchitis. Together, this makes this a multi-label temporal segmentation problem.

In addition, traditional machine learning models require large datasets of labeled data. While lung sound recordings are relatively easy to obtain in a hospital setting, labeling the recordings requires the expertise of medical experts and large amounts of annotation time. Therefore, I will apply semi-supervised techniques to this problem by training models on a small set of labeled auscultation data and a larger set of unlabeled data, to show the viability of such an approach in lung auscultation analysis.

Procedures

- Obtain dataset from public sources and build preprocessing pipeline (log-mel spectrograms and noise reduction routines) to convert raw audio into more readily analyzable spectrogram data.

- Shuffle dataset to ensure data homogeneity while taking care to prevent cross-contamination between train, test, and validation sets.

- Set up machine learning environment. A secondhand HP Z840 server with an NVIDIA RTX 3090 GPU will be used to train models. PyTorch will be used to implement the network architectures and Librosa will be used for audio data processing. CUDA and cuDNN will be used for deep learning GPU acceleration.

- Train models of the proposed network architecture over four different partitions of labeled and unlabeled data: 1/2, 1/4, 1/8, and 1/16. Implement models using the PyTorch framework. Initialize convolutional and linear layers with Kaiming initialization and BiGRUs with orthogonal initialization. Initialize pretrained models, the feature extractor with the weights of a network pretrained on 8kHz data from AudioSet. Use the AdamW optimizer with a weight decay of 10^-3, a polynomial learning rate (initialized at 10^-4) with power 0.9, and a batch size of 16. Because Cross Pseudo Supervision generally makes the model training less stable, start CPS model training with 50 epochs of non-CPS training, then take the model with the best sum of F1 scores on the validation set to continue with 50 epochs of CPS training using both labeled and unlabeled training data.

- Train baseline models (same architecture as one branch of the semi-supervised network, but trained using only supervised data) and conduct ablation study to show the impact of each network component.

Calculate quantitative metrics for each model using F1-score and AUC (detailed in Performance Evaluation section below).

Data Analysis/Performance Evaluation

The resulting machine learning models will be evaluated using quantitative and qualitative metrics. In addition, an ablation study will be conducted to test the effect of adding each individual component of the full neural network.

Quantitative:

For each model, AUC and F1 will be calculated for each of the four classes: inhalation, exhalation, continuous adventitious sound (CAS), and discontinuous adventitious sound (DAS). The train/test split provided by the dataset authors will be used, so there is no cross-contamination between training and testing data (i.e., each patient’s data is present only in the training or in the testing set). 10% of the total training data will be used for validation, with care also taken to avoid cross-contamination. In total, 2,929 recordings (labeled and unlabeled) will be used for training, 325 for validation, and 1,250 for evaluation.

Qualitative:

Visualizations of model outputs will be analyzed and interpreted. The effect of semi-supervised learning will be determined by comparing performance degradation (as the amount of labeled data decreases) in the CPS semi-supervised models against the fully-supervised baseline models.

Ablation Study:

Using 1/8 of the labeled data, four models will be trained that incrementally add the components of the full system, namely the CNN backbone, the BiGRU classifier, large-scale audio pretraining, and semi-supervised learning with CPS. These models will be evaluated with F1 and AUC metrics to show the positive effect of each component on the model performance.

Risk and Safety

This is a purely software-based project and therefore the risk of injury is minimal.

Questions and Answers

1. What was the major objective of your project and what was your plan to achieve it?

The major objective of this project is to pave the path towards more performant automated audio-based respiratory diagnosis systems, by demonstrating the merit of semi-supervised learning in the problem of pulmonary auscultation analysis. This objective was accomplished by developing, training, and testing neural networks that leverage unlabeled data using the semi-supervised learning technique of Cross Pseudo Supervision. The networks were trained on different partitions of labeled and unlabeled data (1/2, 1/4, 1/8, and 1/16 of labels used), and compared to baseline models that were trained without semi-supervised learning.

a. Was that goal the result of any specific situation, experience, or problem you encountered?

I had an irrational fear of the COVID antigen testing kits that required sticking a swab uncomfortably high into the nasal cavities (not helped by sensational news reports of people getting cerebral hemorrhages from poking too hard), and was inspired to find a less invasive method. With previous experience working with machine learning systems in the audio domain, I wondered if it was possible to apply those skills in some way to help diagnose respiratory illnesses like COVID.

b. Were you trying to solve a problem, answer a question, or test a hypothesis?

After surveying the literature, I found that there was prior work in the development of systems to automate the analysis of stethoscope lung sound recordings. However, I discovered that existing datasets for pulmonary auscultation analysis were relatively small, and often only had samples from a few dozen patients. Given that the small sample sizes can be partially attributed to the difficulty in annotation, I decided to test if using unlabeled data could also help to improve model performance.

2. What were the major tasks you had to perform in order to complete your project?

I conducted the entire research process from ideation to conclusion. I developed the research topic largely independently after a suggestion from my research mentor to look into topics relating to audio/signal processing. I surveyed the existing literature and found that existing datasets for pulmonary auscultation analysis were quite small, which led to my idea of applying semi-supervised techniques to this topic.

I designed the network architectures and training procedures. I used Cross Pseudo Supervision because of its promising performance in semantic segmentation; I decided to use a pretrained audio foundation model trained on the large-scale AudioSet as my feature extractor, giving it a general understanding of audio data; I also designed my network with bidirectional recurrent layers to improve its contextualization abilities. While the techniques themselves were developed by others, I significantly modified them to better suit my problem setting.

Since my proposed approach combines several different ideas into one system, I conducted an ablation study to show the positive effect of each component. In addition, I used standardized evaluation techniques to determine the performance of both my approach and a baseline approach over different ratios of labeled and unlabeled data. My analyses showed that my proposed models significantly outperform purely supervised models, with the improvement becoming more drastic as the amount of labeled data decreases.

a. For teams, describe what each member worked on.

N/A

3. What is new or novel about your project?

a/b. Is there some aspect of your project's objective, or how you achieved it that you haven't done before? Is your project's objective, or the way you implemented it, different from anything you have seen?

a/b) While there is a small amount of prior work in semi-supervised lung sound analysis, they are more limited than my proposed approach in two main ways: (1) they use labeled and unlabeled data separately (for example, by using unlabeled data to train a feature extractor in an unsupervised manner and then using labeled data to train a classifier with supervised learning), and (2) they only report clip-wise results. My proposed approach uses labeled and unlabeled data in tandem, so the model is less limited and can more easily understand relationships between both sets of data. My problem is also a multi-label temporal segmentation problem rather than a simple classification problem; my approach can label everything, everywhere, all at once: it gives the position and duration of every sound event in the clip, even when events overlap. This is important because the temporal position of sound events is important in disease diagnosis: crackle sounds occurring in the middle of the inhalation phase are a symptom of interstitial lung fibrosis and pneumonia, while crackle sounds appearing at the beginning of inhalation and during exhalation are a symptom of chronic bronchitis.

c. If you believe your work to be unique in some way, what research have you done to confirm that it is?

I conducted a thorough literature review prior to starting to analyze past efforts in lung auscultation analysis. These results are detailed in my technical paper.

4. What was the most challenging part of completing your project?

a. What problems did you encounter, and how did you overcome them?

When I first implemented the neural network architecture used in this project, I had already determined or designed all of the components: the CNN feature extractor, the foundation model pretraining, the BiGRU temporal classifier, and the twin-network CPS architecture. Therefore, I tried to build the entire system in one shot, and it performed poorly. But which one of these components was broken? Which ones needed to be tuned? With such a complex system, it was difficult to locate the problems and debug the network, especially given the black-box nature of AI. Instead, I started with a very simple architecture (a basic CNN), then slowly built up the model until I had implemented all the components in my original plan. Each time I introduced more complexity, I tested the models to ensure improvement.

b. What did you learn from overcoming these problems?

Research isn’t blindly throwing darts at a wall and hoping something hits; I’ve learned that it is a very incremental process. You can’t start with a complex system and expect it to work on the first try; each piece needs to be integrated one at a time.

Because of this, research takes time; I conducted over 500 machine learning experiments over the course of six months to produce viable results. But investing more time into a particular research direction doesn’t always equate to functional results. One idea I tried to implement involved a hybrid of two semi-supervised methods (Mean Teacher and Cross Pseudo Supervision), but despite its theoretical superiority over both methods, it did not perform well experimentally. However, even after reaching dead ends, I’ve learned to pick up the pieces, keep going, and eventually make some novel contributions.

5. If you were going to do this project again, are there any things you would do differently the next time?

I ran hundreds of individual machine learning experiments through the course of this research project. I recorded the results in a spreadsheet, but this was not especially conducive when it came to comparing different models: there are many variables that could change between runs, and even small changes in the code (which were not always reflected in the spreadsheet) could cause differing results. Thus, if I were to do a similar machine learning project in the future, I would start off by building a comprehensive testing suite that would allow easier comparison between different models. At the start of each training session, it could take a snapshot of all the model hyperparameters and code for future reference, and store the results automatically. This would make the performance evaluation steps more streamlined, facilitating easier cross-comparison and interpretation of model results.

6. Did working on this project give you any ideas for other projects?

Since the results show the viability of using unlabeled data in pulmonary auscultation analysis, the next step is to generate much larger unlabeled auscultation datasets in order to further improve the performance of auscultation analysis systems. The current model architecture could be extended with more advanced approaches, such using transformer models instead of the current BiGRU layers to capture more relationships in the time series data. However, transformers require a larger amount of training data, which is one way that larger unlabeled datasets could be leveraged. The semi-supervised training procedure could be made more stable by training the two branches against exponential moving averages (rather than the branches themselves), or by using reweighting approaches that disregard poor pseudo labels during the unsupervised learning phase. Once these systems are sufficiently reliable, additional models could be developed to determine the illness itself from the detected sound events.

The same concept of semi-supervised audio analysis could be applied to different domains. One immediate and closely related problem is in detecting diseases from tracheal or cardiovascular sounds. Similar architectures could potentially be used in contexts outside of the medical field, such as audio-based industrial monitoring.

7. How did COVID-19 affect the completion of your project?

Given that my project is software-based and occurred largely after the conclusion of the pandemic, COVID-19 did not have a significant impact on my project. However, the diagnosis of respiratory illnesses such as COVID was one of the motivating factors of my research.